Glossary

Adjusted $R^2$: A method for estimating test error rate from the training error rate. Adjusted $R^2$ is a popular choice for comparing models with differing numbers of variables. Recall that $R^2$ is defined as

$$ R^2 = 1 - \frac{\mathrm{RSS}}{\mathrm{TSS}} $$

where TSS is the total sum of squares given by

$$ \mathrm{TSS} = \sum_{i=1}^{n} (y_i - \bar{y})^2 . $$

Since the residual sum of squares always decreases given more variables, $R^2$ will always increase given more variables.

For a least squares fitted model with $d$ predictors, adjusted $R^2$ is given by

$$ \text{Adjusted } R^2 = 1 - \frac{\mathrm{RSS}/(n - d - 1)}{\mathrm{TSS}/(n - 1)} . $$

Unlike Cp, Akaike information criterion, and Bayes information criterion where a smaller value reflects lower test error, for adjusted $R^2$ a larger value signifies a lower test error.

Maximizing adjusted $R^2$ is equivalent to minimizing $\mathrm{RSS}/(n - d - 1)$. Because $d$ appears in the denominator, increasing the number of variables may increase or decrease the value of $\mathrm{RSS}/(n - d - 1)$.

Adjusted $R^2$ aims to penalize models that include unnecessary variables. This stems from the idea that after all of the correct variables have been added, adding additional noise variables will only decrease the residual sum of squares slightly. This slight decrease is counteracted by the presence of $d$ in the denominator of $\mathrm{RSS}/(n - d - 1)$.
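As a minimal sketch of the penalty described above, the following computes $R^2$ and adjusted $R^2$ from RSS and TSS. The function names and the numbers are hypothetical, chosen only to show a noise variable raising $R^2$ while lowering adjusted $R^2$.

```python
def r_squared(rss: float, tss: float) -> float:
    """Plain R^2 = 1 - RSS/TSS."""
    return 1.0 - rss / tss


def adjusted_r_squared(rss: float, tss: float, n: int, d: int) -> float:
    """Adjusted R^2 = 1 - (RSS / (n - d - 1)) / (TSS / (n - 1))."""
    if n - d - 1 <= 0:
        raise ValueError("need more than d + 1 observations")
    return 1.0 - (rss / (n - d - 1)) / (tss / (n - 1))


# Hypothetical values: a fourth (noise) variable lowers RSS only slightly.
n, tss = 50, 200.0
small = adjusted_r_squared(rss=40.0, tss=tss, n=n, d=3)
large = adjusted_r_squared(rss=39.9, tss=tss, n=n, d=4)
print(small > large)  # the smaller model is preferred by adjusted R^2
```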

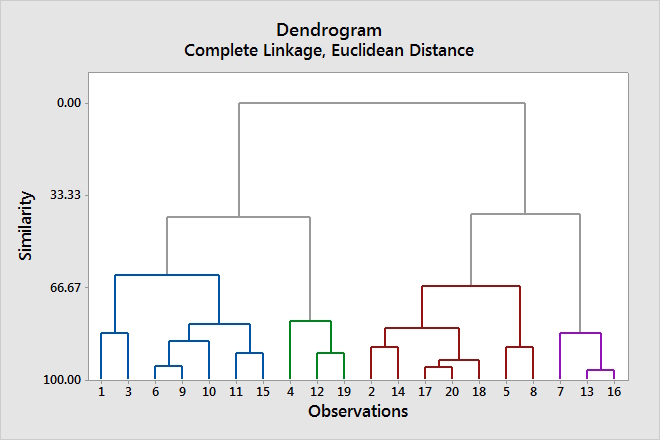

Agglomerative Clustering: The most common type of hierarchical clustering in which the dendrogram is built starting from the [terminal nodes](#terminal-nodes) and combining them into clusters up to the trunk.

Clusters can be extracted from the dendrogram by making a horizontal cut across the dendrogram and taking the distinct sets of observations below the cut. The height of the cut to the dendrogram serves a similar role to $K$ in K-means clustering: it controls the number of clusters yielded.

Akaike Information Criterion: A method for estimating test error rate from the training error rate. The Akaike information criterion (AIC) is defined for a large class of models fit by maximum likelihood. In the case of linear regression with $d$ predictors, when errors follow a Gaussian distribution, maximum likelihood and least squares are the same thing, in which case AIC is given by

$$ \mathrm{AIC} = \frac{1}{n \hat{\sigma}^2} \left( \mathrm{RSS} + 2 d \hat{\sigma}^2 \right) . $$

This formula omits an additive constant, but even so it can be seen that Cp and AIC are proportional for least squares models and as such AIC offers no benefit over Cp in this case.

Backfitting: A method of fitting a model involving multiple predictors by repeatedly updating the fit for each predictor in turn, holding the others fixed. This approach has the benefit that each time a function is updated the fitting method for a variable can be applied to a partial residual. Backfitting can be used by generalized additive models in situations where least squares cannot be used.

A partial residual is the remainder left over after subtracting the products of the fixed variables and their respective coefficients from the response. This residual can be used as a response in a non-linear regression of the variables being updated.

For example, given a model of

$$ y_i = f_1(x_{i1}) + f_2(x_{i2}) + f_3(x_{i3}) $$

a residual for $x_{i3}$ could be computed as

$$ r_i = y_i - f_1(x_{i1}) - f_2(x_{i2}) . $$

The yielded residual can then be used as a response in order to fit $f_3$ in a non-linear regression on $x_3$.

Backward Selection: A variable selection method that begins with a model that includes all the predictors and proceeds by removing the variable with the highest p-value each iteration until some stopping condition is met. Backward selection cannot be used when $p > n$.

Backward Stepwise Selection: A variable selection method that starts with the full least squares model utilizing all predictors and iteratively removes the least useful predictor with each iteration.

An algorithm for backward stepwise selection:

1. Let $\mathcal{M}_p$ denote a model using all $p$ predictors.

2. For $k = p, p - 1, \ldots, 1$:

    - Consider all $k$ models that use $k - 1$ predictors from $\mathcal{M}_k$.

    - Choose the best of these $k$ models as determined by the smallest RSS or highest $R^2$. Call this model $\mathcal{M}_{k-1}$.

3. Select the single best model from $\mathcal{M}_0, \ldots, \mathcal{M}_p$ using cross-validated prediction error, Cp (Akaike information criterion), Bayes information criterion, or adjusted $R^2$.

Like forward stepwise selection, backward stepwise selection searches through only $1 + \frac{p(p+1)}{2}$ models, making it useful in scenarios where $p$ is too large for best subset selection. Like forward stepwise selection, backward stepwise selection is not guaranteed to yield the best possible model.

Unlike forward stepwise selection, backward stepwise selection requires that the number of samples, $n$, is greater than the number of variables, $p$, so the full model with all $p$ predictors can be fit.

Both forward stepwise selection and backward stepwise selection perform a guided search over the model space and effectively consider substantially more than $1 + \frac{p(p+1)}{2}$ models.

Bagging: Bootstrap aggregation, or bagging, is a general purpose procedure for reducing the variance of statistical learning methods that is particularly useful for decision trees.

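Formally, bagging aims to reduce variance by calculating $\hat{f}^{*1}(x), \hat{f}^{*2}(x), \ldots, \hat{f}^{*B}(x)$ using $B$ separate training sets created using bootstrap resampling, and averaging the results of the functions to obtain a single, low-variance statistical learning model given by

$$ \hat{f}_{\mathrm{bag}}(x) = \frac{1}{B} \sum_{b=1}^{B} \hat{f}^{*b}(x) . $$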

Bagging can improve predictions for many regression methods, but it’s especially useful for decision trees.

Bagging is applied to regression trees by constructing $B$ regression trees using $B$ bootstrapped training sets and averaging the resulting predictions. The constructed trees are grown deep and are not pruned. This means each individual tree has high variance, but low bias. Averaging the results of the $B$ trees reduces the variance.

In the case of classification trees, a similar approach can be taken; however, instead of averaging the predictions, the prediction is determined by the most commonly occurring class among the $B$ predictions, that is, the mode value.

The number of trees, $B$, is not a critical parameter with bagging. Picking a large value for $B$ will not lead to overfitting. Typically, a value of $B$ is chosen that is large enough to ensure the variance and error rate have settled down.

Basis Function Approach: Polynomial and piecewise constant functions are special cases of a basis function approach. The basis function approach utilizes a family of functions or transformations that can be applied to a variable $X$: $b_1(X), b_2(X), \ldots, b_K(X)$.

Instead of fitting a linear model in $X$, a similar model that applies the fixed and known basis functions to $X$ is used:

$$ y_i = \beta_0 + \beta_1 b_1(x_i) + \beta_2 b_2(x_i) + \cdots + \beta_K b_K(x_i) + \epsilon_i . $$

For polynomial regression, the basis functions are $b_j(x_i) = x_i^j$. For piecewise constant functions the basis functions are $b_j(x_i) = I(c_j \le x_i < c_{j+1})$.

Since the basis function model is just linear regression with predictors $b_1(x_i), b_2(x_i), \ldots, b_K(x_i)$, least squares can be used to estimate the unknown regression coefficients. Additionally, all the inference tools for linear models like standard error for coefficient estimates and F-statistics for overall model significance can also be employed in this setting.

Many different types of basis functions exist.

Bayes Classifier: A very simple classifier that assigns each observation to the most likely class given its predictor variables.

In Bayesian terms, an observation with predictor vector $x_0$ should be assigned to the class $j$ for which

$$ \Pr(Y = j \mid X = x_0) $$

is largest. That is, the class for which the conditional probability that $Y = j$, given the observed predictor vector $x_0$, is largest.

Bayes Decision Boundary: The threshold inherent to a two-class Bayes classifier where the classification probability is exactly 50%.

Bayes Error Rate: The Bayes classifier yields the lowest possible test error rate since it will always choose the class with the highest probability. The Bayes error rate can be stated formally as

$$ 1 - E\left( \max_j \Pr(Y = j \mid X) \right) . $$

The Bayes error rate can also be described as the ratio of observations that lie on the “wrong” side of the decision boundary.

Bayes Information Criterion: A method for estimating test error rate from the training error rate.

For least squares models with $d$ predictors, the Bayes information criterion (BIC), excluding a few irrelevant constants, is given by

$$ \mathrm{BIC} = \frac{1}{n} \left( \mathrm{RSS} + \log(n)\, d\, \hat{\sigma}^2 \right) . $$

Similar to Cp, Bayes information criterion tends to take on smaller values when test MSE is low, so smaller values of BIC are preferable.

Bayes information criterion replaces the $2 d \hat{\sigma}^2$ penalty imposed by Cp with a penalty of $\log(n)\, d\, \hat{\sigma}^2$, where $n$ is the number of observations. Because $\log n$ is greater than 2 for $n > 7$, the BIC statistic tends to penalize models with more variables more heavily than Cp, which in turn results in the selection of smaller models.
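The difference between the two penalties can be sketched numerically. The functions below assume the forms $C_p = (\mathrm{RSS} + 2d\hat{\sigma}^2)/n$ and $\mathrm{BIC} = (\mathrm{RSS} + \log(n)\, d\, \hat{\sigma}^2)/n$; the function names and the input values are hypothetical.

```python
import math


def cp(rss: float, n: int, d: int, sigma2_hat: float) -> float:
    """Cp with a penalty of 2 * d * sigma^2 (assumed form)."""
    return (rss + 2 * d * sigma2_hat) / n


def bic(rss: float, n: int, d: int, sigma2_hat: float) -> float:
    """BIC with a penalty of log(n) * d * sigma^2 (assumed form, constants dropped)."""
    return (rss + math.log(n) * d * sigma2_hat) / n


# Hypothetical fit: same RSS, same d, n = 100 observations.
rss, n, d, sigma2_hat = 120.0, 100, 5, 1.5
print(cp(rss, n, d, sigma2_hat), bic(rss, n, d, sigma2_hat))
```

Because the natural log of $n$ first exceeds 2 at $n = 8$, BIC is the larger of the two values for any sample of more than seven observations.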

Bayes Theorem: Describes the probability of an event, based on prior knowledge of conditions that might be related to the event. Also known as Bayes’ law or Bayes’ rule.

Bayes’ theorem is stated mathematically as

$$ P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)} $$

where $A$ and $B$ are events and $P(B)$ is greater than zero.
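A short worked example may help. The sketch below expands $P(B)$ with the law of total probability; the function name and the probabilities (a 1% prior, a 95% true positive rate, a 5% false positive rate) are hypothetical.

```python
def posterior(prior_a: float, p_b_given_a: float, p_b_given_not_a: float) -> float:
    """Return P(A | B) using Bayes' theorem."""
    # P(B) = P(B | A) P(A) + P(B | not A) P(not A)
    p_b = p_b_given_a * prior_a + p_b_given_not_a * (1.0 - prior_a)
    if p_b <= 0:
        raise ValueError("P(B) must be greater than zero")
    return p_b_given_a * prior_a / p_b


# Hypothetical numbers: rare event A, fairly reliable evidence B.
p = posterior(prior_a=0.01, p_b_given_a=0.95, p_b_given_not_a=0.05)
print(round(p, 3))
```

Even with fairly reliable evidence, the posterior stays well below one half here because the prior is so small.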

Best Subset Selection: A variable selection method that involves fitting a separate least squares regression for each of the $2^p$ possible combinations of the $p$ predictors and then selecting the best model.

Selecting the “best” model is not a trivial process and usually involves a two-step procedure, as outlined by the algorithm below:

1. Let $\mathcal{M}_0$ denote the null model which uses no predictors and always yields the sample mean for predictions.

2. For $k = 1, 2, \ldots, p$:

    - Fit all $\binom{p}{k}$ models that contain exactly $k$ predictors.

    - Let $\mathcal{M}_k$ denote the model that yields the smallest RSS or equivalently the largest $R^2$.

3. Select the best model from $\mathcal{M}_0, \ldots, \mathcal{M}_p$ using cross-validated prediction error, Cp (Akaike information criterion), Bayes information criterion, or adjusted $R^2$.

It should be noted that step 3 of the above algorithm should be performed with care because as the number of features used by the models increases, the RSS decreases monotonically and the $R^2$ increases monotonically. Because of this, picking the best model by RSS or $R^2$ will always yield the model involving all of the variables. This stems from the fact that RSS and $R^2$ are measures of training error, whereas the goal is to select the model with low test error. For this reason, step 3 utilizes cross-validated prediction error, Cp, BIC, or adjusted $R^2$ to select the best model.

Best subset selection has computational limitations since $2^p$ models must be considered. As such, best subset selection becomes computationally infeasible for values of $p$ greater than ~40.
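To make the $2^p$ growth concrete, the sketch below enumerates every candidate predictor subset with `itertools.combinations`. The predictor names are hypothetical, and no models are actually fit.

```python
from itertools import combinations


def all_subsets(predictors):
    """Yield every subset of the predictors, grouped by size k = 0, ..., p."""
    for k in range(len(predictors) + 1):
        for subset in combinations(predictors, k):
            yield subset


names = ["x1", "x2", "x3", "x4"]  # hypothetical predictor names
subsets = list(all_subsets(names))
print(len(subsets))  # number of candidate models for p = 4
print(2 ** 40)       # number of candidate models for p = 40
```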

Bias: The error that is introduced by approximating a potentially complex function using a simple model. More flexible models tend to have less bias.

Bias-Variance Trade-Off: The relationship between bias, variance, and test mean squared error, called a trade-off because it is a challenge to find a model that has a low test mean squared error and both a low variance and a low squared bias.

Boosting: A decision tree method similar to bagging. Whereas bagging builds each tree independently of the other trees, boosted trees are grown using information from previously grown trees. Boosting also differs in that it does not use bootstrap sampling. Instead, each tree is fit to a modified version of the original data set. Like bagging, boosting combines a large number of decision trees, $\hat{f}^1, \ldots, \hat{f}^B$.

Each new tree added to a boosting model is fit to the residuals of the model instead of the response, $Y$.

Each new decision tree is then added to the fitted function to update the residuals. Each of the trees can be small with just a few terminal nodes, determined by the tuning parameter, $d$.

By repeatedly adding small trees based on the residuals, $\hat{f}$ will slowly improve in areas where it does not perform well.

Boosting has three tuning parameters:

- $B$, the number of trees. Unlike bagging and random forests, boosting can overfit if $B$ is too large, although overfitting tends to occur slowly if at all. Cross validation can be used to select a value for $B$.

- $\lambda$, the shrinkage parameter, a small positive number that controls the rate at which the boosting model learns. Typical values are $0.01$ or $0.001$ depending on the problem. Very small values of $\lambda$ can require a very large value for $B$ in order to achieve good performance.

- $d$, the number of splits in each tree, which controls the complexity of the boosted model. Often $d = 1$ works well, in which case each tree is a stump consisting of one split. This approach yields an additive model since each term involves only a single variable. In general terms, $d$ is the interaction depth of the model and it controls the interaction order of the model since $d$ splits can involve at most $d$ variables.

With boosting, because each tree takes into account the trees that came before it, smaller trees are often sufficient. Smaller trees also aid interpretability.

An algorithm for boosting regression trees:

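1. Set $\hat{f}(x) = 0$ and $r_i = y_i$ for all $i$ in the training set.

2. For $b = 1, 2, \ldots, B$ repeat:

    - Fit a tree $\hat{f}^b$ with $d$ splits ($d + 1$ terminal nodes) to the training data $(X, r)$.

    - Update $\hat{f}$ by adding a shrunken version of the new tree:

      $$ \hat{f}(x) \leftarrow \hat{f}(x) + \lambda \hat{f}^b(x) $$

    - Update the residuals:

      $$ r_i \leftarrow r_i - \lambda \hat{f}^b(x_i) $$

3. Output the boosted model,

   $$ \hat{f}(x) = \sum_{b=1}^{B} \lambda \hat{f}^b(x) . $$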
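The steps above can be sketched in plain Python for the simplest case: a single predictor and stumps ($d = 1$). The helper names `fit_stump` and `boost`, the toy data, and the default tuning values are assumptions for illustration, not a reference implementation.

```python
def fit_stump(x, r):
    """Fit a one-split regression tree to residuals r by minimizing RSS."""
    best = None
    values = sorted(set(x))
    for lo, hi in zip(values, values[1:]):
        cut = (lo + hi) / 2.0
        left = [ri for xi, ri in zip(x, r) if xi < cut]
        right = [ri for xi, ri in zip(x, r) if xi >= cut]
        mean_l = sum(left) / len(left)
        mean_r = sum(right) / len(right)
        rss = (sum((ri - mean_l) ** 2 for ri in left)
               + sum((ri - mean_r) ** 2 for ri in right))
        if best is None or rss < best[0]:
            best = (rss, cut, mean_l, mean_r)
    _, cut, mean_l, mean_r = best
    return lambda xi: mean_l if xi < cut else mean_r


def boost(x, y, n_trees=200, shrinkage=0.1):
    """Boosted regression stumps for one predictor (needs >= 2 distinct x values)."""
    trees = []
    r = list(y)                    # step 1: f(x) = 0, so r_i = y_i
    for _ in range(n_trees):       # step 2
        tree = fit_stump(x, r)     # fit a stump to (X, r)
        trees.append(tree)         # f <- f + lambda * tree
        r = [ri - shrinkage * tree(xi) for xi, ri in zip(x, r)]
    # step 3: the boosted model is the shrunken sum of all trees
    return lambda xi: sum(shrinkage * t(xi) for t in trees)


# Toy data (hypothetical): y = x^2 on ten points.
x = [float(i) for i in range(10)]
y = [xi ** 2 for xi in x]
model = boost(x, y)
mse_before = sum(yi ** 2 for yi in y) / len(y)  # error of f(x) = 0
mse_after = sum((yi - model(xi)) ** 2 for xi, yi in zip(x, y)) / len(y)
print(mse_after < mse_before)
```

Each stump update can only shrink the residual sum of squares on the training data, which is why the training error falls steadily as trees are added.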

Bootstrap: A widely applicable resampling method that can be used to quantify the uncertainty associated with a given estimator or statistical learning approach, including those for which it is difficult to obtain a measure of variability.

The bootstrap generates distinct data sets by repeatedly sampling observations from the original data set. These generated data sets can be used to estimate variability in lieu of sampling independent data sets from the full population.

The sampling employed by the bootstrap involves randomly selecting $n$ observations with replacement, which means some observations can be selected multiple times while other observations are not included at all.

This process is repeated $B$ times to yield $B$ bootstrap data sets, $Z^{*1}, Z^{*2}, \ldots, Z^{*B}$, which can be used to estimate other quantities such as standard error.

For example, the estimated standard error of an estimated quantity $\hat{\alpha}$ can be computed using the bootstrap as follows:

$$ \mathrm{SE}_B(\hat{\alpha}) = \sqrt{ \frac{1}{B - 1} \sum_{r=1}^{B} \left( \hat{\alpha}^{*r} - \frac{1}{B} \sum_{s=1}^{B} \hat{\alpha}^{*s} \right)^2 } $$
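A minimal sketch of this procedure follows, using only the standard library. The function name, the data values, the number of resamples, and the seed are hypothetical choices.

```python
import random
import statistics


def bootstrap_se(data, estimator, n_boot=1000, seed=0):
    """Estimate the standard error of `estimator` from bootstrap resamples."""
    rng = random.Random(seed)
    n = len(data)
    estimates = []
    for _ in range(n_boot):
        # Draw n observations with replacement from the original data set.
        sample = [data[rng.randrange(n)] for _ in range(n)]
        estimates.append(estimator(sample))
    # statistics.stdev divides by (B - 1), matching the bootstrap SE formula.
    return statistics.stdev(estimates)


data = [2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 4.7, 3.9, 3.1]  # hypothetical sample
se = bootstrap_se(data, statistics.mean)
print(se)
```

For the sample mean, the result can be sanity-checked against the familiar $s / \sqrt{n}$ estimate. The same function applies unchanged to estimators with no simple standard error formula, such as the median.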

Branch: A segment of a decision tree that connects two nodes.

Classification Problem: A class of problem that is well suited to statistical techniques for determining if an observation is a member of a particular class or which of a number of classes the observation belongs to.

Classification Tree: A type of decision tree that is similar to a regression tree, however it is used to predict a qualitative response. For a classification tree, predictions are made based on the notion that each observation belongs to the most commonly occurring class of the training observations in the region to which the observation belongs.

When growing a tree, the Gini index or cross-entropy are typically used to evaluate the quality of a particular split since both methods are more sensitive to node purity than classification error rate is.

When pruning a classification tree, any of the three measures can be used, though classification error rate tends to be the preferred method if the goal of the pruned tree is prediction accuracy.

Compared to linear models, decision trees will tend to do better in scenarios where the relationship between the response and the predictors is non-linear and complex. In scenarios where the relationship is well approximated by a linear model, an approach such as linear regression will tend to better exploit the linear structure and outperform decision trees.

Cluster Analysis: The task of grouping a set of observations in such a way that the observations in the same group or cluster are more similar, in some sense, to each other than those observations in other groups or clusters.

Coefficient: A number or symbol representing a number that is multiplied with a variable or an unknown quantity in an algebraic term.

Collinearity: The situation in which two or more predictor variables are closely related to one another.

Collinearity can pose problems for linear regression because it can make it hard to determine the individual impact of collinear predictors on the response.

Collinearity reduces the accuracy of the regression coefficient estimates, which in turn causes the standard error of $\hat{\beta}_j$ to grow. Since the t-statistic for each predictor is calculated by dividing $\hat{\beta}_j$ by its standard error, collinearity results in a decline in the t-statistic. This may cause it to appear that $X_j$ is not related to the response when it is. As such, collinearity reduces the power of the hypothesis test, that is, the probability of correctly detecting a non-zero coefficient. Because of all this, it is important to address possible collinearity problems when fitting the model.

One way to detect collinearity is to generate a correlation matrix of the predictors. Any element in the matrix with a large absolute value indicates highly correlated predictors. This is not always sufficient though, as it is possible for collinearity to exist between three or more variables even if no pair of variables have high correlation. This scenario is known as multicollinearity.

Confidence Interval: A range of values such that there’s an X% likelihood that the range will contain the true, unknown value of the parameter. For example, a 95% confidence interval is a range of values such that there’s a 95% chance that the range contains the true unknown value of the parameter.

Confounding: In general, the scenario in which the result obtained with a single predictor does not match the result with multiple predictors, especially when there is correlation among the predictors. More specifically, confounding describes situations in which the experimental controls do not adequately allow for ruling out alternative explanations for the observed relationship between the predictors and the response.

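Correlation: A measure of the linear relationship between $X$ and $Y$ calculated as

$$ \mathrm{Cor}(X, Y) = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\, \sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}} . $$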
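The sample correlation can be computed directly from its definition, as in this minimal sketch (the function name and the data are hypothetical):

```python
import math


def correlation(x, y):
    """Sample correlation between two equal-length sequences."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    numerator = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    denominator = (math.sqrt(sum((xi - x_bar) ** 2 for xi in x))
                   * math.sqrt(sum((yi - y_bar) ** 2 for yi in y)))
    return numerator / denominator


print(correlation([1, 2, 3, 4], [2, 4, 6, 8]))  # perfectly linear, increasing
print(correlation([1, 2, 3, 4], [8, 6, 4, 2]))  # perfectly linear, decreasing
```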

Cost Complexity Pruning: A strategy for pruning decision trees that reduces the possibility space to a sequence of trees indexed by a non-negative tuning parameter, $\alpha$.

For each value of $\alpha$ there corresponds a subtree, $T \subset T_0$, such that

$$ \sum_{m=1}^{|T|} \sum_{i:\, x_i \in R_m} (y_i - \hat{y}_{R_m})^2 + \alpha |T| $$

is as small as possible, where $|T|$ indicates the number of terminal nodes in the tree $T$, $R_m$ is the predictor region corresponding to the mth terminal node, and $\hat{y}_{R_m}$ is the predicted response associated with $R_m$ (the mean of the training observations in $R_m$).

The tuning parameter $\alpha$ acts as a control on the trade-off between the subtree’s complexity and its fit to the training data. When $\alpha$ is zero, then the subtree $T$ will equal $T_0$ since the training fit is unaltered. As $\alpha$ increases, the penalty for having more terminal nodes increases, resulting in a smaller subtree.

As $\alpha$ increases from zero, the pruning process proceeds in a nested and predictable manner which makes it possible to obtain the whole sequence of subtrees as a function of $\alpha$ easily.

Also known as weakest link pruning.

Cp: Cp, or Mallows’s Cp, is a tool for estimating test error rate from the training error rate. For a model containing $d$ predictors fitted with least squares, the Cp estimate of test mean squared error is calculated as

$$ C_p = \frac{1}{n} \left( \mathrm{RSS} + 2 d \hat{\sigma}^2 \right) $$

where $\hat{\sigma}^2$ is an estimate of the variance of the error $\epsilon$ associated with each response measurement. In essence, the Cp statistic adds a penalty of $2 d \hat{\sigma}^2$ to the training residual sum of squares to adjust for the tendency for training error to underestimate test error and adjust for additional predictors.

It can be shown that if $\hat{\sigma}^2$ is an unbiased estimate of $\sigma^2$, then Cp will be an unbiased estimate of test mean squared error. As a result, Cp tends to take on small values when test mean squared error is low, so a model with a low Cp is preferable.

Cp and Akaike information criterion are proportional for least squares models and as such AIC offers no benefit over Cp in such a scenario.

Cross Entropy: Borrowed from information theory, cross-entropy can be used as a function to determine classification error rate in the context of classification trees. Formally defined as

$$ D = - \sum_{k=1}^{K} \hat{p}_{mk} \log \hat{p}_{mk} . $$

Since $\hat{p}_{mk}$ must always be between zero and one, it follows that $-\hat{p}_{mk} \log \hat{p}_{mk} \ge 0$. Like the Gini index, cross-entropy will take on a small value if the mth region is pure.
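A minimal sketch of the cross-entropy of a single region, given its class proportions, follows. The function name is hypothetical, and a zero proportion is treated as contributing nothing to the sum, a common convention that is assumed here.

```python
import math


def cross_entropy(proportions):
    """D = -sum_k p_mk * log(p_mk), skipping classes with zero proportion."""
    return -sum(p * math.log(p) for p in proportions if p > 0)


print(cross_entropy([1.0, 0.0]))  # a pure region
print(cross_entropy([0.5, 0.5]))  # an evenly mixed two-class region
```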

Cross Validation: A resampling method that can be used to estimate a given statistical method’s test error or to determine the appropriate amount of flexibility.

Cross validation can be used both to estimate how well a given statistical learning procedure might perform on new data and to estimate the minimum point in the estimated test mean squared error curve, which can be useful when comparing statistical learning methods or when comparing different levels of flexibility for a single statistical learning method.

Cross validation can also be useful when $Y$ is qualitative, in which case the number of misclassified observations is used instead of mean squared error.

Curse of Dimensionality: Refers to various phenomena that arise when analyzing and organizing data in high-dimensional settings that do not occur in low-dimensional settings. The common theme of these problems is that when the dimensionality increases, the volume of the space increases so fast that the available data become sparse which can be problematic for any method that requires statistical significance because the amount of data needed to support a statistically sound and reliable result often grows exponentially with the dimensionality.

Decision Tree: A tree-like structure made from stratifying or segmenting the predictor space into a number of simple regions. These structures are referred to as trees because the splitting rules used to segment the predictor space can be summarized in a tree that is typically drawn upside down with the leaves or terminal nodes at the bottom of the tree.

Decision Tree Methods: Also known as tree-based methods. Strategies for stratifying or segmenting the predictor space into a number of simple regions. Predictions are then made using the mean or mode of the training observations in the region to which the predictions belong. These methods are referred to as trees because the splitting rules used to segment the predictor space can be summarized in a tree.

Though tree-based methods are simple and useful for interpretation, they typically aren’t competitive with the best supervised learning techniques. Because of this, approaches such as bagging, random forests, and boosting have been developed to produce multiple trees which are then combined to yield a single consensus prediction. Combining a large number of trees can often improve prediction accuracy at the cost of some loss in interpretation.

Degrees of Freedom: A numeric value that quantifies the number of values in the model that are free to vary. The degrees of freedom is a quantity that summarizes the flexibility of a curve.

Dendrogram: A tree diagram, frequently used in the context of hierarchical clustering.

Dendrograms are attractive because a single dendrogram can be used to obtain any number of clusters.

Often people will look at the dendrogram and select a sensible number of clusters by eye, depending on the heights of the fusions and the number of clusters desired. Unfortunately, the choice of where to cut the dendrogram is not always evident.

Density Function: A function whose value for any given sample in the sample space (the set of possible values taken by the random variable) can be interpreted as providing a relative likelihood that the value of the random variable would equal that sample.

The density function of $X$ for an observation that comes from the kth class is defined as

$$ f_k(x) = \Pr(X = x \mid Y = k) . $$

This means that $f_k(x)$ should be relatively large if there’s a high probability that an observation from the kth class features $X = x$. Conversely, $f_k(x)$ will be relatively small if it is unlikely that an observation in class k would feature $X = x$.

Dimension Reduction Methods: A class of techniques that transform the predictors and then fit a least squares model using the transformed variables instead of the original predictors.

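Let $Z_1, Z_2, \ldots, Z_M$ represent $M < p$ linear combinations of the original predictors, $X_1, X_2, \ldots, X_p$. Formally,

$$ Z_m = \sum_{j=1}^{p} \phi_{jm} X_j $$

for some constants $\phi_{1m}, \phi_{2m}, \ldots, \phi_{pm}$, $m = 1, \ldots, M$. It is then possible to use least squares to fit the linear regression model:

$$ y_i = \theta_0 + \sum_{m=1}^{M} \theta_m z_{im} + \epsilon_i $$

where $i = 1, \ldots, n$ and the regression coefficients are represented by $\theta_0, \theta_1, \ldots, \theta_M$.

If the constants $\phi_{jm}$ are chosen carefully, dimension reduction approaches can outperform least squares regression of the original predictors.

The term dimension reduction references the fact that this approach reduces the problem of estimating the $p + 1$ coefficients $\beta_0, \beta_1, \ldots, \beta_p$ to the simpler problem of estimating the $M + 1$ coefficients $\theta_0, \theta_1, \ldots, \theta_M$, where $M < p$, thereby reducing the dimension of the problem from $p + 1$ to $M + 1$.

All dimension reduction methods work in two steps. First, the transformed predictors, $Z_1, Z_2, \ldots, Z_M$, are obtained. Second, the model is fit using the $M$ transformed predictors.

The difference in dimension reduction methods tends to arise from the means of deriving the transformed predictors, $Z_1, Z_2, \ldots, Z_M$, or the selection of the $\phi_{jm}$ coefficients.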

Two popular forms of dimension reduction are principal component analysis and partial least squares.

Discriminant Analysis: An alternative to regression analysis applied to discrete dependent variables and concerned with separating sets of observed values into classes.

Dummy Variable: A derived variable taking on a value of 0 or 1 to indicate membership in some mutually exclusive category or class. Multiple dummy variables can be used in conjunction to model classes with more than two possible values. Similar to and often used interchangeably with the term indicator variable. Dummy variables make it easy to mix quantitative and qualitative predictors.

An example dummy variable encoding:

When dummy variables are used to model classes with more than two possible values, the number of dummy variables required will always be one less than the number of values that the predictor can take on.

For example, with a predictor that can take on three values, the following coding could be used:
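A minimal sketch of such a coding, using hypothetical level names (`red`, `green`, `blue`) that are assumptions for illustration. The first level acts as the baseline and is encoded as all zeros, so three levels need only two dummy variables.

```python
def dummy_encode(value, levels):
    """Encode a categorical value using len(levels) - 1 dummy variables.

    The first entry of `levels` is the baseline and maps to all zeros.
    """
    if value not in levels:
        raise ValueError(f"unknown level: {value!r}")
    return [1 if value == level else 0 for level in levels[1:]]


levels = ["red", "green", "blue"]  # hypothetical three-level predictor
for value in levels:
    print(value, dummy_encode(value, levels))
```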

F-Distribution: A continuous probability distribution that arises frequently as the null distribution of a test statistic, most notably in the analysis of variance.

F-Statistic: A test statistic that follows an F-distribution under the null hypothesis and is useful for assessing model fit.

The F-statistic can be computed as

$$ F = \frac{(\mathrm{TSS} - \mathrm{RSS})/p}{\mathrm{RSS}/(n - p - 1)} $$

where, again,

$$ \mathrm{TSS} = \sum_{i=1}^{n} (y_i - \bar{y})^2 $$

and

$$ \mathrm{RSS} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 . $$

If the assumptions of the linear model are true, it can be shown that

$$ E\left\{ \frac{\mathrm{RSS}}{n - p - 1} \right\} = \sigma^2 . $$

If, in addition, the null hypothesis is true, it can be shown that

$$ E\left\{ \frac{\mathrm{TSS} - \mathrm{RSS}}{p} \right\} = \sigma^2 . $$

This means that when there is no relationship between the response and the predictors the F-statistic takes on a value close to $1$.

Conversely, if the alternative hypothesis is true, then the F-statistic will take on a value greater than $1$.

When $n$ is large, an F-statistic only slightly greater than $1$ may provide evidence against the null hypothesis. If $n$ is small, a large F-statistic is needed to reject the null hypothesis.
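As a small numeric illustration, the F-statistic can be computed directly from TSS, RSS, $n$, and $p$. The function name and the input values below are hypothetical.

```python
def f_statistic(tss: float, rss: float, n: int, p: int) -> float:
    """F = ((TSS - RSS) / p) / (RSS / (n - p - 1))."""
    if n - p - 1 <= 0:
        raise ValueError("need n > p + 1 observations")
    return ((tss - rss) / p) / (rss / (n - p - 1))


# Hypothetical fit: p = 2 predictors, n = 53 observations.
f = f_statistic(tss=200.0, rss=100.0, n=53, p=2)
print(f)  # far above 1, suggesting at least one predictor is related to the response
```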

Forward Selection: A variable selection method that begins with a null model, a model that has an intercept but no predictors, and fits $p$ simple linear regressions, keeping whichever predictor results in the lowest residual sum of squares. In the same fashion, the predictor whose addition yields the lowest residual sum of squares is added to the model, one at a time, until some halting condition is met. Forward selection is a greedy process that may include extraneous variables.

Forward Stepwise Selection: A variable selection method that begins with a model that utilizes no predictors and successively adds predictors one-at-a-time until the model utilizes all the predictors. Specifically, the predictor that yields the greatest additional improvement is added to the model at each step.

An algorithm for forward stepwise selection is outlined below:

1. Let $\mathcal{M}_0$ denote the null model that utilizes no predictors.

2. For $k = 0, 1, \ldots, p - 1$:

    - Consider all $p - k$ models that augment the predictors of $\mathcal{M}_k$ with one additional predictor.

    - Choose the best of these $p - k$ models, the one that yields the smallest RSS or largest $R^2$, and call it $\mathcal{M}_{k+1}$.

3. Select a single best model from the models $\mathcal{M}_0, \ldots, \mathcal{M}_p$ using cross-validated prediction error, Cp (Akaike information criterion), Bayes information criterion, or adjusted $R^2$.

Forward stepwise selection involves fitting one null model and $p - k$ models for each iteration of $k = 0, 1, \ldots, p - 1$. This amounts to $1 + \sum_{k=0}^{p-1} (p - k) = 1 + \frac{p(p+1)}{2}$ models, which is a significant improvement over best subset selection’s $2^p$ models.

Forward stepwise selection may not always find the best possible model out of all $2^p$ models due to its additive nature. For example, forward stepwise selection could not find the best 2-variable model in a data set where the best 1-variable model utilizes a variable not used by the best 2-variable model.

Forward stepwise selection is the only viable subset selection method in high-dimensional scenarios where $n < p$; however, it can only construct the submodels $\mathcal{M}_0, \ldots, \mathcal{M}_{n-1}$ due to the reliance on least squares regression.

Both forward stepwise selection and backward stepwise selection perform a guided search over the model space and effectively consider substantially more than $1 + \frac{p(p+1)}{2}$ models.
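The gap between the two search sizes can be checked with a few lines of arithmetic. The function names below are hypothetical.

```python
def stepwise_model_count(p: int) -> int:
    """Models fit by forward (or backward) stepwise selection: 1 + p(p + 1)/2."""
    return 1 + p * (p + 1) // 2


def best_subset_model_count(p: int) -> int:
    """Models fit by best subset selection: 2^p."""
    return 2 ** p


for p in (5, 10, 20):
    print(p, stepwise_model_count(p), best_subset_model_count(p))
```

With 20 predictors, stepwise selection fits a few hundred models while best subset selection fits over a million.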

Gaussian Distribution: A theoretical frequency distribution represented by a normal curve or bell curve. Also known as a normal distribution.

Generalized Additive Model: A general framework for extending a standard linear model by allowing non-linear functions of each of the predictors while maintaining additivity. Generalized additive models can be applied with both quantitative and qualitative responses.

One way to extend the multiple linear regression model

$$ y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \cdots + \beta_p x_{ip} + \epsilon_i $$

to allow for non-linear relationships between each feature and the response is to replace each linear component, $\beta_j x_{ij}$, with a smooth non-linear function $f_j(x_{ij})$, which would yield the model

$$ y_i = \beta_0 + \sum_{j=1}^{p} f_j(x_{ij}) + \epsilon_i . $$

This model is additive because a separate $f_j$ is calculated for each $X_j$ and then added together.

The additive nature of GAMs makes them more interpretable than some other types of models.

GAMs allow for using the many methods of fitting functions to single variables as building blocks for fitting an additive model.

Backfitting can be used by GAMs in situations where least squares cannot be used.

Gini Index: A measure of the total variance across the $K$ classes defined as

$$ G = \sum_{k=1}^{K} \hat{p}_{mk} (1 - \hat{p}_{mk}) $$

where $\hat{p}_{mk}$ represents the proportion of the training observations in the mth region that are from the kth class.

The Gini index can be viewed as a measure of region purity as a small value indicates the region contains mostly observations from a single class.

Often used as a function for assessing classification error rate in the context of classification trees.
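A minimal sketch of the Gini index for a single region, given its class proportions (the function name and the example proportions are hypothetical):

```python
def gini_index(proportions):
    """G = sum_k p_mk * (1 - p_mk) for the class proportions of one region."""
    return sum(p * (1.0 - p) for p in proportions)


print(gini_index([1.0, 0.0]))  # a pure region
print(gini_index([0.5, 0.5]))  # an evenly mixed two-class region
```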

Heteroscedasticity: A characteristic of a collection of random variables in which there are sub-populations that have different variability from other sub-populations. Heteroscedasticity can lead to regression models that seem stronger than they really are since standard errors, confidence intervals, and hypothesis testing all assume that error terms have a constant variance.

Hierarchical Clustering: A clustering method that first builds a dendrogram and then obtains clusters by cutting the dendrogram at a height that will yield a desirable set of clusters.

The hierarchical clustering dendrogram is obtained by first selecting some sort of measure of dissimilarity between each pair of observations; often Euclidean distance is used. Starting at the bottom of the dendrogram, each of the $n$ observations is treated as its own cluster. With each iteration, the two clusters that are most similar are merged together, so after the first merge there are $n - 1$ clusters. This process is repeated until all the observations belong to a single cluster and the dendrogram is complete.

The dissimilarity between the two clusters that are merged indicates the height in the dendrogram at which the fusion should be placed.

One issue not addressed is how clusters with multiple observations are compared. This requires extending the notion of dissimilarity to a pair of groups of observations. Linkage defines the dissimilarity between two groups of observations.

The term hierarchical refers to the fact that the clusters obtained by cutting the dendrogram at the given height are necessarily nested within the clusters obtained by cutting the dendrogram at any greater height.

The hierarchical structure assumption is not always valid. For example, splitting a group of people into sexes and splitting a group of people by race yield clusters that aren’t necessarily hierarchical in structure. Because of this, hierarchical clustering can sometimes yield worse results than K-means clustering.

Hierarchical Principle: A guiding philosophy that states that, when an interaction term is included in the model, the main effects should also be included, even if the p-values associated with their coefficients are not significant. The reason for this is that $X_1 X_2$ is often correlated with $X_1$ and $X_2$, and removing them tends to change the meaning of the interaction.

If $X_1 X_2$ is related to the response, then whether or not the coefficient estimates of $X_1$ or $X_2$ are exactly zero is of limited interest.

High-Dimensional: A term used to describe scenarios where there are more features than observations.

High Leverage: In contrast to outliers, which are observations for which the response $y_i$ is unusual given the predictor $x_i$, observations with high leverage are those that have an unusual value for the predictor $x_i$.

High leverage observations tend to have a sizable impact on the estimated regression line and as a result, removing them can yield improvements in model fit.

For simple linear regression, high leverage observations can be identified as those for which the predictor value is outside the normal range. With multiple regression, it is possible to have an observation for which each individual predictor’s values are well within the expected range, but that is unusual in terms of the combination of the full set of predictors.

To quantify an observation’s leverage, the leverage statistic can be computed.

A large leverage statistic indicates an observation with high leverage.

For simple linear regression, the leverage statistic can be computed as

$$ h_i = \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_{j=1}^{n} (x_j - \bar{x})^2} $$

The leverage statistic always falls between $\frac{1}{n}$ and $1$, and the average leverage is always equal to $\frac{p + 1}{n}$. So, if an observation has a leverage statistic that greatly exceeds $\frac{p + 1}{n}$, then it may be evidence that the corresponding point has high leverage.
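As a rough illustration, the sketch below computes the leverage statistic for simple linear regression, assuming the standard form $h_i = \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_j (x_j - \bar{x})^2}$. The function name `leverage_simple` and the predictor values are hypothetical, chosen only so that one point sits far from the rest.

```python
import numpy as np

def leverage_simple(x):
    """Leverage statistic h_i for simple linear regression (one predictor)."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    return 1.0 / x.size + centered**2 / np.sum(centered**2)

# Hypothetical predictor values; the last one lies far from the others.
x = np.array([1.0, 2.0, 3.0, 4.0, 20.0])
h = leverage_simple(x)

# Average leverage is (p + 1) / n, with p = 1 predictor here.
average_leverage = 2 / x.size
high_leverage = h > 2 * average_leverage  # the factor of 2 is an arbitrary cutoff
```

The cutoff used to flag points is a judgment call; the text only says that the statistic should greatly exceed the average.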

Hybrid Subset Selection: Hybrid subset selection methods add variables to the model sequentially, analogous to forward stepwise selection, but with each iteration they may also remove any variables that no longer offer any improvement to model fit.

Hybrid approaches try to better simulate best subset selection while maintaining the computational advantages of stepwise approaches.

Hyperplane: In a $p$-dimensional space, a hyperplane is a flat affine subspace of dimension $p - 1$. For example, in two dimensions, a hyperplane is a flat one-dimensional subspace, or in other words, a line. In three dimensions, a hyperplane is a plane.

The word affine indicates that the subspace need not pass through the origin.

A $p$-dimensional hyperplane is defined as

$$ \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \ldots + \beta_p X_p = 0 $$

which means that any $X = (X_1, X_2, \ldots, X_p)^T$ for which the above hyperplane equation holds is a point on the hyperplane.

If $X$ doesn’t fall on the hyperplane, then it must fall on one side of the hyperplane or the other. As such, a hyperplane can be thought of as dividing a $p$-dimensional space into two partitions. Which side of the hyperplane a point falls on can be computed by calculating the sign of the result of plugging the point into the hyperplane equation.
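A minimal sketch of the sign check described above, assuming the hyperplane is written as $\beta_0 + \beta_1 X_1 + \ldots + \beta_p X_p = 0$. The function name `hyperplane_side` and the coefficients are illustrative only.

```python
import numpy as np

def hyperplane_side(beta0, beta, x):
    """Return +1 or -1 for the side of the hyperplane x falls on, 0 if on it."""
    value = beta0 + np.dot(beta, x)
    return int(np.sign(value))

# Hypothetical hyperplane in two dimensions: 1 + 2*X1 + 3*X2 = 0 (a line).
beta0, beta = 1.0, np.array([2.0, 3.0])

side_a = hyperplane_side(beta0, beta, np.array([1.0, 1.0]))    # 1 + 2 + 3 = 6
side_b = hyperplane_side(beta0, beta, np.array([-1.0, -1.0]))  # 1 - 2 - 3 = -4
on_line = hyperplane_side(beta0, beta, np.array([1.0, -1.0]))  # 1 + 2 - 3 = 0
```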

Hypothesis Testing: The process of applying the scientific method to produce, test, and iterate on theories. Typical steps include: making an initial assumption; collecting evidence (data); and deciding whether to accept or reject the initial assumption, based on the available evidence (data).

Input: The variables that contribute to the value of the response or dependent variable in a given function. Also known as predictors, independent variables, or features. Input variables may be qualitative or quantitative.

Indicator Variable: A derived variable taking on a mutually exclusive value of 0 or 1 based on an associated indicator function which typically returns 0 to indicate the absence of some property and 1 to indicate the presence of that property. Similar to and often used interchangeably with the term dummy variable, especially when dealing with classes with only two possible values. Indicator variables make it easy to mix qualitative and quantitative predictors.

An example indicator variable encoding:

Interaction Term: A derived predictor that gets its value from computing the product of two associated predictors with the goal of better capturing the interaction between the two given predictors.

A linear regression model that accounts for interaction between two predictors would look like

$$ Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_1 X_2 + \epsilon $$

where $\beta_3$ can be interpreted as the increase in effectiveness of $X_1$ given a one-unit increase in $X_2$ and vice-versa.

It is sometimes possible for an interaction term to have a very small p-value while the associated main effects, $X_1$ and $X_2$, do not. Even in such a scenario the main effects should still be included in the model due to the hierarchical principle.

Intercept: In a linear model, the value of the dependent variable, $Y$, when the independent variable, $X$, is equal to zero. Also described as the point at which a given line intersects the y-axis.

Inner Node: One of the many points in a decision tree where the predictor space is split. Also known as an internal node, or inode for short, or branch node.

Irreducible Error: A random error term that is independent of the independent variables with a mean roughly equal to zero that is intended to account for inaccuracies in a function which may arise from unmeasured variables or unmeasured variation. The irreducible error will always enforce an upper bound on the accuracy of attempts to predict the dependent variable.

K-Fold Cross Validation: A resampling method that operates by randomly dividing the set of observations into $K$ groups, or folds, of roughly equal size. Similar to leave-one-out cross validation, each of the $K$ folds is used in turn as the validation set while the other $K - 1$ folds are used as the training set, generating $K$ estimates of the test error. The K-fold cross validation estimated test error comes from the average of these estimates.

It can be shown that leave-one-out cross validation is a special case of K-fold cross validation where $K = n$.

Typical values for $K$ are 5 or 10 since these values require less computation than when $K$ is equal to $n$.

There is a bias-variance trade-off inherent to the choice of $K$ in K-fold cross validation. Typically, values of $K = 5$ or $K = 10$ are used as these values have been empirically shown to produce test error rate estimates that suffer from neither excessively high bias nor very high variance.

In terms of bias, leave-one-out cross validation is preferable to K-fold cross validation and K-fold cross validation is preferable to the validation set approach.

In terms of variance, K-fold cross validation where $K < n$ is preferable to leave-one-out cross validation and leave-one-out cross validation is preferable to the validation set approach.
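The procedure can be sketched as follows. This is only an illustration under stated assumptions: the model is a simple linear fit via `np.polyfit`, the error measure is mean squared error, and the name `k_fold_mse` and the toy data are hypothetical.

```python
import numpy as np

def k_fold_mse(x, y, k=5, seed=0):
    """Estimate test MSE of a simple linear fit using K-fold cross validation."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(x))   # shuffle before splitting into folds
    folds = np.array_split(indices, k)  # K folds of roughly equal size
    fold_mse = []
    for fold in folds:
        train = np.setdiff1d(indices, fold)  # the other K - 1 folds
        slope, intercept = np.polyfit(x[train], y[train], deg=1)
        predictions = intercept + slope * x[fold]
        fold_mse.append(np.mean((y[fold] - predictions) ** 2))
    return float(np.mean(fold_mse))  # average of the K fold estimates

# Hypothetical noiseless data, so the estimated error should be close to zero.
x = np.arange(20, dtype=float)
y = 2.0 * x + 1.0
cv_5 = k_fold_mse(x, y, k=5)
cv_loo = k_fold_mse(x, y, k=len(x))  # K = n gives leave-one-out cross validation
```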

K-Means Clustering: A method of cluster analysis that aims to partition a data set into $K$ distinct, non-overlapping clusters, where $K$ is stipulated in advance.

The K-means clustering procedure is built on a few constraints. Given sets $C_1, \ldots, C_K$ containing the indices of the observations in each cluster, these sets must satisfy two properties:

- Each observation belongs to at least one of the $K$ clusters: $C_1 \cup C_2 \cup \ldots \cup C_K = \{1, \ldots, n\}$

- No observation belongs to more than one cluster. Clusters are non-overlapping: $C_k \cap C_{k'} = \emptyset$ for all $k \neq k'$

In the context of K-means clustering, a good cluster is one for which the within-cluster variation is as small as possible. For a cluster $C_k$, the within-cluster variation, $W(C_k)$, is a measure of the amount by which the observations in a cluster differ from each other. As such, an ideal clustering would minimize

$$ \min_{C_1, \ldots, C_K} \left\{ \sum_{k=1}^{K} W(C_k) \right\} $$

Informally, this means that the observations should be partitioned into $K$ clusters such that the total within-cluster variation, summed over all $K$ clusters, is as small as possible.

In order to solve this optimization problem, it is first necessary to define the means by which within-cluster variation will be evaluated. There are many ways to evaluate within-cluster variation, but the most common choice tends to be squared Euclidean distance, defined as

$$ W(C_k) = \frac{1}{\lvert C_k \rvert} \sum_{i, i' \in C_k} \sum_{j=1}^{p} (x_{ij} - x_{i'j})^2 $$

where $\lvert C_k \rvert$ denotes the number of observations in the kth cluster.

Combining this with the abstract optimization problem outlined earlier yields

$$ \min_{C_1, \ldots, C_K} \left\{ \sum_{k=1}^{K} \frac{1}{\lvert C_k \rvert} \sum_{i, i' \in C_k} \sum_{j=1}^{p} (x_{ij} - x_{i'j})^2 \right\} $$

Finding the optimal solution to this problem is computationally infeasible unless $K$ and $n$ are very small, since there are almost $K^n$ ways to partition $n$ observations into $K$ clusters. However, a simple algorithm exists to find a local optimum.

K-means clustering gets its name from the fact that the cluster centroids are computed as means of the observations assigned to each cluster.
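The sketch below shows one common form of the local-optimum algorithm: assign observations to clusters at random, then alternate between computing centroids and reassigning each observation to its nearest centroid. The name `k_means`, the re-seeding of empty clusters, and the toy data are assumptions made for illustration.

```python
import numpy as np

def k_means(X, k, n_iter=100, seed=0):
    """Find a local optimum of the K-means objective by iterative reassignment."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Step 1: randomly assign each observation to one of the k clusters.
    labels = rng.integers(0, k, size=len(X))
    for _ in range(n_iter):
        # Step 2a: each centroid is the mean of its assigned observations.
        # An empty cluster is re-seeded with a random observation (an assumption).
        centroids = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j)
            else X[rng.integers(len(X))]
            for j in range(k)
        ])
        # Step 2b: reassign each observation to the closest centroid
        # using squared Euclidean distance.
        distances = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        new_labels = distances.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break  # assignments stopped changing
        labels = new_labels
    return labels, centroids

# Hypothetical data: two well-separated groups of three points each.
X = np.array([[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]], dtype=float)
labels, centroids = k_means(X, k=2)
```

Because the result depends on the random initial assignment, running the algorithm from several starting points and keeping the solution with the smallest objective is a common precaution.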

K-Nearest Neighbors Classifier: A classifier that takes a positive integer $K$ and first identifies the $K$ points that are nearest to $x_0$, represented by $N_0$. It next estimates the conditional probability for class $j$ based on the fraction of points in $N_0$ that have a response equal to $j$. Formally, the estimated conditional probability can be stated as

$$ \Pr(Y = j \mid X = x_0) = \frac{1}{K} \sum_{i \in N_0} I(y_i = j) $$

The K-Nearest Neighbor classifier then applies Bayes theorem and yields the classification with the highest probability.

Despite its simplicity, the K-Nearest Neighbor classifier often yields results that are surprisingly close to the optimal Bayes classifier.

The choice of $K$ can have a drastic effect on the yielded classifier, as $K$ controls the bias-variance trade-off for the classifier.
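A minimal sketch of the classifier, assuming Euclidean distance and the estimate $\frac{1}{K} \sum_{i \in N_0} I(y_i = j)$ for each class $j$. The function name `knn_classify` and the training data are hypothetical.

```python
import numpy as np

def knn_classify(X_train, y_train, x0, k):
    """Classify x0 by the class with the largest share among its k nearest points."""
    distances = np.linalg.norm(X_train - x0, axis=1)
    neighbors = np.argsort(distances)[:k]  # indices of the points in N_0
    # Estimated conditional probability for each class j.
    probs = {
        c.item(): float(np.mean(y_train[neighbors] == c))
        for c in np.unique(y_train)
    }
    return max(probs, key=probs.get), probs

# Hypothetical training set with two classes.
X_train = np.array([[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]], dtype=float)
y_train = np.array([0, 0, 0, 1, 1, 1])

label_near, probs_near = knn_classify(X_train, y_train, np.array([0.5, 0.5]), k=3)
label_far, probs_far = knn_classify(X_train, y_train, np.array([4.0, 4.0]), k=5)
```

With `k=5` the second query draws in two points from the other class, which shows how a larger $K$ smooths the estimated probabilities.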

K-Nearest Neighbors Regression: A non-parametric method akin to linear regression.

Given a value for $K$ and a prediction point $x_0$, K-nearest neighbors regression first identifies the $K$ observations that are closest to $x_0$, represented by $N_0$. $f(x_0)$ is then estimated using the average of the responses in $N_0$ like so

$$ \hat{f}(x_0) = \frac{1}{K} \sum_{x_i \in N_0} y_i $$

In higher dimensions, K-nearest neighbors regression often performs worse than linear regression. This is often due to combining too small an $n$ with too large a $p$, resulting in a given observation having no nearby neighbors. This is often called the curse of dimensionality.

Knot: For regression splines, one of the points at which the coefficients utilized by the underlying function are changed to better model the respective region.

There are a variety of methods for choosing the number and location of the knots. Because the regression spline is most flexible in regions that contain a lot of knots, one option is to place more knots where the function might vary the most and fewer knots where the function might be more stable. Another common practice is to place the knots in a uniform fashion. One means of doing this is to choose the desired degrees of freedom and then use software or other heuristics to place the corresponding number of knots at uniform quantiles of the data.

Cross validation is a useful mechanism for determining the appropriate number of knots and/or degrees of freedom.

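$\ell_1$ norm: The $\ell_1$ norm of a vector is defined as

$$ \lVert \beta \rVert_1 = \sum_{j=1}^{p} \lvert \beta_j \rvert $$

$\ell_2$ norm: The $\ell_2$ norm of a vector is defined as

$$ \lVert \beta \rVert_2 = \sqrt{\sum_{j=1}^{p} \beta_j^2} $$

The $\ell_2$ norm measures the distance of the vector, $\beta$, from zero.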

Lasso: A more recent alternative to ridge regression that allows for excluding some variables.

Coefficient estimates for the lasso are generated by minimizing the quantity

$$ \sum_{i=1}^{n} \left( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \right)^2 + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert = RSS + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert $$

The main difference between ridge regression and the lasso is the change in penalty. Instead of the $\beta_j^2$ term of ridge regression, the lasso uses the $\ell_1$ norm of the coefficient vector as its penalty term. The $\ell_1$ norm of a coefficient vector is given by

$$ \lVert \beta \rVert_1 = \sum_{j=1}^{p} \lvert \beta_j \rvert $$

The $\ell_1$ penalty can force some coefficient estimates to zero when the tuning parameter $\lambda$ is sufficiently large. This means that, like subset methods, the lasso performs variable selection. As a result, models generated from the lasso tend to be easier to interpret than the models formulated with ridge regression. These models are sometimes called sparse models since they include only a subset of the variables.

The variable selection of the lasso can be considered a kind of soft thresholding. The lasso will perform better in scenarios where not all of the predictors are related to the response, or where some number of variables are only weakly associated with the response.
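To make the soft thresholding idea concrete, the sketch below applies the soft-thresholding operator to a vector of coefficients. This corresponds to the lasso solution only under a simplifying assumption (for example, orthonormal predictors), and both the function name `soft_threshold` and the threshold value are illustrative; how the threshold relates to the tuning parameter depends on how the objective is scaled.

```python
import numpy as np

def soft_threshold(z, t):
    """Shrink each value toward zero by t, setting values within t of zero to zero."""
    z = np.asarray(z, dtype=float)
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

# Hypothetical least squares coefficients; two are only weakly non-zero.
z = np.array([3.0, -0.4, 0.2, -2.5])
shrunk = soft_threshold(z, 0.5)
n_selected = int(np.count_nonzero(shrunk))
```

The two small coefficients are set exactly to zero while the larger ones are shrunk by the threshold, which is the sense in which the lasso both shrinks and selects.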

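Least Squares Line: The line yielded by least squares regression,

$$ \hat{y} = \hat{\beta}_0 + \hat{\beta}_1 x $$

where the coefficients $\hat{\beta}_0$ and $\hat{\beta}_1$ are approximations of the unknown coefficients of the population regression line, $\beta_0$ and $\beta_1$.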

Leave-One-Out Cross Validation: A resampling method similar to the validation set approach, except instead of splitting the observations evenly, leave-one-out cross-validation withholds only a single observation for the validation set. This process can be repeated $n$ times with each observation being withheld once. This yields $n$ mean squared errors which can be averaged together to yield the leave-one-out cross-validation estimate of the test mean squared error.

$$ CV_{(n)} = \frac{1}{n} \sum_{i=1}^{n} MSE_i $$

Leave-one-out cross validation has much less bias than the validation set approach. Leave-one-out cross validation also tends not to overestimate the test mean squared error since many more observations are used for training. In addition, leave-one-out cross validation is much less variable across repeated runs. In fact, it always yields the same result since there’s no randomness in the set splits.

Leave-one-out cross validation can be expensive to implement since the model has to be fit $n$ times. This can be especially expensive in situations where $n$ is very large and/or when each individual model is slow to fit.

In the classification setting, the leave-one-out cross validation error rate takes the form

$$ CV_{(n)} = \frac{1}{n} \sum_{i=1}^{n} Err_i $$

where $Err_i = I(y_i \neq \hat{y}_i)$. The K-fold cross validation error rate and the validation set error rate are defined similarly.

Likelihood Function: A function often used to estimate parameters from a set of independent observations. For logistic regression a common likelihood function takes the form

$$ \ell(\beta_0, \beta_1) = \prod_{i: y_i = 1} p(x_i) \prod_{j: y_j = 0} (1 - p(x_j)) $$

Estimates for $\beta_0$ and $\beta_1$ are chosen so as to maximize this likelihood function for logistic regression.

Linear Discriminant Analysis: While logistic regression models the conditional distribution of the response $Y$ given the predictor(s) $X$, linear discriminant analysis takes the approach of modeling the distribution of the predictor(s) $X$ separately in each of the response classes, $Y$, and then uses Bayes’ theorem to invert these probabilities to estimate the conditional distribution.

Linear discriminant analysis is popular when there are more than two response classes. Beyond its popularity, linear discriminant analysis also benefits from not being susceptible to some of the problems that logistic regression suffers from:

- The parameter estimates for logistic regression can be surprisingly unstable when the response classes are well separated. Linear discriminant analysis does not suffer from this problem.

- Logistic regression is more unstable than linear discriminant analysis when $n$ is small and the distribution of the predictors $X$ is approximately normal in each of the response classes.

Linkage: A measure of the dissimilarity between two groups of observations.

There are four common types of linkage: complete, average, single, and centroid. Average, complete and single linkage are most popular among statisticians. Centroid linkage is often used in genomics. Average and complete linkage tend to be preferred because they tend to yield more balanced dendrograms. Centroid linkage suffers from a major drawback in that an inversion can occur where two clusters fuse at a height below either of the individual clusters in the dendrogram.

Complete linkage uses the maximal inter-cluster dissimilarity, calculated by computing all of the pairwise dissimilarities between observations in cluster A and observations in cluster B and taking the largest of those dissimilarities.

Single linkage uses the minimal inter-cluster dissimilarity given by computing all the pairwise dissimilarities between observations in clusters A and B and taking the smallest of those dissimilarities. Single linkage can result in extended trailing clusters where each observation fuses one-at-a-time.

Average linkage uses the mean inter-cluster dissimilarity given by computing all pairwise dissimilarities between the observations in cluster A and the observations in cluster B and taking the average of those dissimilarities.

Centroid linkage computes the dissimilarity between the centroid for cluster A and the centroid for cluster B.
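The four linkage types can be compared side by side with a short sketch. It assumes Euclidean distance as the underlying dissimilarity; the function name `linkage_dissimilarity` and the two small clusters are hypothetical.

```python
import numpy as np

def linkage_dissimilarity(A, B, method="complete"):
    """Dissimilarity between clusters A and B under the given linkage."""
    A, B = np.asarray(A, dtype=float), np.asarray(B, dtype=float)
    if method == "centroid":
        # Distance between the two cluster centroids.
        return float(np.linalg.norm(A.mean(axis=0) - B.mean(axis=0)))
    # All pairwise distances between observations in A and observations in B.
    pairwise = np.linalg.norm(A[:, None, :] - B[None, :, :], axis=2)
    if method == "complete":
        return float(pairwise.max())
    if method == "single":
        return float(pairwise.min())
    if method == "average":
        return float(pairwise.mean())
    raise ValueError(f"unknown linkage: {method}")

# Hypothetical clusters lying on a line, so distances are easy to check by hand.
A = [[0, 0], [1, 0]]
B = [[3, 0], [6, 0]]
results = {m: linkage_dissimilarity(A, B, m)
           for m in ("complete", "single", "average", "centroid")}
```

Here the pairwise distances are 3, 6, 2, and 5, so complete linkage gives 6, single linkage gives 2, and average linkage gives 4.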

Local Regression: An approach to fitting flexible non-linear functions which involves computing the fit at a target point using only the nearby training observations.

Each new point from which a local regression fit is calculated requires fitting a new weighted least squares regression model by minimizing the appropriate regression weighting function for a new set of weights.

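Log-Odds: Taking a logarithm of both sides of the logistic odds equation yields an equation for the log-odds or logit,

$$ \log \left( \frac{p(X)}{1 - p(X)} \right) = \beta_0 + \beta_1 X $$

Logistic regression has log-odds that are linear in terms of $X$.

The log-odds equation for multiple logistic regression can be expressed as

$$ \log \left( \frac{p(X)}{1 - p(X)} \right) = \beta_0 + \beta_1 X_1 + \ldots + \beta_p X_p $$

Logistic Function: A function with a common “S” shaped sigmoid curve guaranteed to return a value between $0$ and $1$. For logistic regression, the logistic function takes the form

$$ p(X) = \frac{e^{\beta_0 + \beta_1 X}}{1 + e^{\beta_0 + \beta_1 X}} $$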
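A small sketch of the logistic function and its inverse relationship with the log-odds, assuming the single-predictor form $p(X) = \frac{e^{\beta_0 + \beta_1 X}}{1 + e^{\beta_0 + \beta_1 X}}$. The function names and coefficient values are hypothetical.

```python
import math

def logistic(x, beta0, beta1):
    """Logistic function; algebraically equal to e^z / (1 + e^z), z = beta0 + beta1*x."""
    z = beta0 + beta1 * x
    return 1.0 / (1.0 + math.exp(-z))

def log_odds(p):
    """Log-odds (logit) of a probability p strictly between 0 and 1."""
    return math.log(p / (1.0 - p))

# Hypothetical coefficients: the log-odds at x = 3 should be -1 + 0.5 * 3 = 0.5.
p = logistic(3.0, beta0=-1.0, beta1=0.5)
recovered = log_odds(p)
```

Applying `log_odds` to the output of `logistic` recovers the linear predictor, which is the sense in which the log-odds are linear in $X$. For very large negative inputs `math.exp` can overflow, so a production version would need a more careful formulation.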

Logistic Regression: A regression model where the dependent variable is categorical or qualitative. Logistic regression models the probability that $Y$ belongs to a particular category rather than modeling the response itself. Logistic regression uses a logistic function to ensure a prediction between $0$ and $1$.

Logit: Taking a logarithm of both sides of the logistic odds equation yields an equation for the log-odds or logit.

Logistic regression has log-odds that are linear in terms of $X$.

Maximal Margin Classifier: A classifier that uses the maximal margin hyperplane to classify test observations. The maximal margin hyperplane (also known as the optimal separating hyperplane) is the separating hyperplane which has the farthest minimum distance, or margin, from the training observations in terms of perpendicular distance.

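The maximal margin classifier classifies a test observation $x^{\ast}$ based on the sign of

$$ f(x^{\ast}) = \beta_0 + \beta_1 x_1^{\ast} + \ldots + \beta_p x_p^{\ast} $$

where $\beta_0, \beta_1, \ldots, \beta_p$ are the coefficients of the maximal margin hyperplane.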

The maximal margin hyperplane represents the mid-line of the widest gap between the two classes.

If no separating hyperplane exists, no maximal margin hyperplane exists either. However, a soft margin can be used to construct a hyperplane that almost separates the classes. This generalization of the maximal margin classifier is known as the support vector classifier.

Maximum Likelihood: A strategy utilized by logistic regression to estimate regression coefficients.

Maximum likelihood plays out like so: determine estimates for $\beta_0$ and $\beta_1$ such that the predicted probability $\hat{p}(x_i)$ corresponds with the observed classes as closely as possible. Formally, this yields an equation called a likelihood function:

$$ \ell(\beta_0, \beta_1) = \prod_{i: y_i = 1} p(x_i) \prod_{j: y_j = 0} (1 - p(x_j)) $$

Estimates for $\beta_0$ and $\beta_1$ are chosen so as to maximize this likelihood function.

Linear regression’s least squares approach is actually a special case of maximum likelihood.

Maximum likelihood is also used to estimate $\beta_0, \beta_1, \ldots, \beta_p$ in the case of multiple logistic regression.

Mean Squared Error: A statistical method for assessing how well the responses predicted by a modeled function correspond to the actual observed responses. Mean squared error is computed by taking the average squared difference between the predicted responses and their respective observed responses, formally,

$$ MSE = \frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{f}(x_i) \right)^2 $$

Mean squared error will be small when the predicted responses are close to the true responses and large if there’s a substantial difference between the predicted response and the observed response for some observations.

Mixed Selection: A variable selection method that begins with a null model, like forward selection, repeatedly adding whichever predictor yields the best fit. As more predictors are added, the p-values of variables already in the model can become larger. When this happens, if the p-value for one of the variables exceeds a certain threshold, that variable is removed from the model. The selection process continues in this forward and backward manner until all the variables in the model have sufficiently low p-values and all the predictors excluded from the model would result in a high p-value if added to the model.

Model Assessment: The process of evaluating the performance of a given model.

Model Selection: The process of selecting the appropriate level of flexibility for a given model.

Multicollinearity: The situation in which collinearity exists between three or more variables even if no pair of variables have high correlation.

Multicollinearity can be detected by computing the [variance inflation factor][variance-inflation-factor].

Multiple Linear Regression: An extension of simple linear regression that accommodates multiple predictors.

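The multiple linear regression model takes the form of

$$ Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \ldots + \beta_p X_p + \epsilon $$

$X_j$ represents the jth predictor and $\beta_j$ represents the average effect of a one-unit increase in $X_j$ on $Y$, holding all other predictors fixed.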

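Multiple Logistic Regression: An extension of simple logistic regression that accommodates multiple predictors. Multiple logistic regression can be generalized as

$$ \log \left( \frac{p(X)}{1 - p(X)} \right) = \beta_0 + \beta_1 X_1 + \ldots + \beta_p X_p $$

where $X = (X_1, X_2, \ldots, X_p)$ are $p$ predictors.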

Multivariate Gaussian Distribution: A generalization of the one-dimensional Gaussian distribution that assumes that each predictor follows a one-dimensional normal distribution with some correlation between the predictors. The more correlation between predictors, the more the bell shape of the normal distribution will be distorted.

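A p-dimensional variable X can be indicated to have a multivariate Gaussian distribution with the notation $X \sim N(\mu, \Sigma)$ where $E(X) = \mu$ is the mean of $X$ (a vector with p components) and $\mathrm{Cov}(X) = \Sigma$ is the p x p covariance matrix of $X$.

Multivariate Gaussian density is formally defined as

$$ f(x) = \frac{1}{(2\pi)^{p/2} \lvert \Sigma \rvert^{1/2}} \exp \left( -\frac{1}{2} (x - \mu)^T \Sigma^{-1} (x - \mu) \right) $$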

Natural Spline: A regression spline with additional boundary constraints that force the function to be linear in the boundary region.

Non-Parametric: Not involving any assumptions about the form or parameters of a function being modeled.

Non-Parametric Methods: A class of techniques that don’t make explicit assumptions about the shape of the function being modeled and instead seek to estimate the function by getting as close to the training data points as possible without being too coarse or granular, preferring smoothness instead. Non-parametric methods can fit a wider range of possible functions since essentially no assumptions are made about the form of the function being modeled.

Normal Distribution: A theoretical frequency distribution represented by a normal curve or bell curve. Also known as a Gaussian distribution.

Null Hypothesis: The most common hypothesis test involves testing the null hypothesis that states

$H_0$: There is no relationship between $X$ and $Y$

versus the alternative hypothesis

$H_a$: There is some relationship between $X$ and $Y$

In mathematical terms, the null hypothesis corresponds to testing if $\beta_1 = 0$, which reduces to

$$ Y = \beta_0 + \epsilon $$

which evidences that $X$ is not related to $Y$.

To test the null hypothesis, it is necessary to determine whether the estimate of $\beta_1$, $\hat{\beta}_1$, is sufficiently far from zero to provide confidence that $\beta_1$ is non-zero.

Null Model: In linear regression, a model that includes an intercept, but no predictors.

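Odds: The logistic function can be rearranged to yield

$$ \frac{p(X)}{1 - p(X)} = e^{\beta_0 + \beta_1 X} $$

$\frac{p(X)}{1 - p(X)}$ is known as the odds and takes on a value between $0$ and infinity.

As an example, a probability of 1 in 5 yields odds of $\frac{1}{4}$ since $\frac{0.2}{1 - 0.2} = \frac{1}{4}$.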

Outlier: A point for which $y_i$ is far from the value predicted by the model.

Excluding outliers can result in improved residual standard error and improved $R^2$ values, usually with negligible impact to the least squares fit.

Residual plots can help identify outliers, though it can be difficult to know how big a residual needs to be before considering a point an outlier. To address this, it can be useful to plot the studentized residuals instead of the normal residuals. Observations whose studentized residual is greater than $3$ in absolute value are possible outliers.

Outliers should only be removed when confident that the outliers are due to a recording or data collection error since outliers may otherwise indicate a missing predictor or other deficiency in the model.

One-Standard-Error Rule: The one-standard-error rule advises that when many models have low estimated test error and it’s difficult or variable as to which model has the lowest test error, one should select the model with the fewest variables that is within one standard error of the lowest estimated test error. The rationale being that given a set of more or less equally good models, it’s often better to pick the simpler model.

One-Versus-All: Assuming $K > 2$ classes, [one-versus-all][glossary-one-versus-all] fits $K$ SVMs, each time comparing one of the $K$ classes to the remaining $K - 1$ classes. Assuming a test observation $x^{\ast}$ and coefficients $\beta_{0k}, \beta_{1k}, \ldots, \beta_{pk}$, resulting from fitting an SVM comparing the kth class (coded as $+1$) to the others (coded as $-1$), the test observation is assigned to the class for which

$$ \beta_{0k} + \beta_{1k} x_1^{\ast} + \ldots + \beta_{pk} x_p^{\ast} $$

is largest, as this amounts to the highest level of confidence.

One-Versus-One: Assuming $K > 2$ classes, [one-versus-one][glossary-one-versus-one], or all-pairs, constructs $\binom{K}{2}$ SVMs, each of which compares a pair of classes. A test observation would be classified using each of the $\binom{K}{2}$ classifiers, with the final classification given by the class most frequently predicted by the classifiers.

Output: The result of computing a given function with all of the independent variables replaced with concrete values. Also known as the response or dependent variable. Output may be qualitative or quantitative.

Overfitting: A phenomenon where a model closely matches the training data such that it captures too much of the noise or error in the data. This results in a model that fits the training data very well, but doesn’t make good predictions under test or in general. Overfitting refers specifically to scenarios in which a less flexible model would have yielded a smaller test mean squared error.

P-Value: When the null hypothesis is true, the probability for a given model that a statistical summary (such as a t-statistic) would be of equal or of greater magnitude compared to the actual observed results. Can indicate an association between the predictor and the response if the value is sufficiently small, further indicating that the null hypothesis may not be true.

Parameter: A number or symbol representing a number that is multiplied with a variable or an unknown quantity in an algebraic term.

Parametric: Relating to or expressed in terms of one or more parameters.

Parametric Methods: A class of techniques that make explicit assumptions about the shape of the function being modeled and seek to estimate the assumed function by estimating parameters or coefficients that yield a function that fits the training data points as closely as possible, within the constraints of the assumed functional form. Parametric methods tend to have higher bias since they make assumptions about the form of the function being modeled.

Partial Least Squares: A regression technique that first identifies a new set of features that are linear combinations of the original predictors and then uses these new features to fit a linear model using least squares.

Unlike principal component regression, partial least squares makes use of the response to identify new features that not only approximate the original predictors well, but that are also related to the response.

In practice, partial least squares often performs no better than principal component regression or ridge regression. Though the supervised dimension reduction of partial least squares can reduce bias, it also has the potential to increase variance. Because of this, the benefit of partial least squares compared to principal component regression is often negligible.

Polynomial Regression: An extension to the linear model intended to accommodate non-linear relationships and mitigate the effects of the linear assumption by incorporating polynomial functions of the predictors into the linear regression model.

For example, in a scenario where a quadratic relationship seems likely, the following model could be used

$$ Y = \beta_0 + \beta_1 X + \beta_2 X^2 + \epsilon $$

Population Regression Line: The line that describes the best linear approximation to the true relationship between $X$ and $Y$ for the population.

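Portion of Variance Explained: A means of determining how much of the variance in the data is captured by the first $M$ principal components, and therefore how much is not.

The total variance in the data set, assuming the variables have been centered to have a mean of zero, is defined by

$$ \sum_{j=1}^{p} \mathrm{Var}(X_j) = \sum_{j=1}^{p} \frac{1}{n} \sum_{i=1}^{n} x_{ij}^2 $$

The variance explained by the mth principal component is defined as

$$ \frac{1}{n} \sum_{i=1}^{n} z_{im}^2 = \frac{1}{n} \sum_{i=1}^{n} \left( \sum_{j=1}^{p} \phi_{jm} x_{ij} \right)^2 $$

From these equations it can be seen that the portion of the variance explained for the mth principal component is given by

$$ \frac{\sum_{i=1}^{n} \left( \sum_{j=1}^{p} \phi_{jm} x_{ij} \right)^2}{\sum_{j=1}^{p} \sum_{i=1}^{n} x_{ij}^2} $$

To compute the cumulative portion of variance explained by the first $M$ principal components, the individual portions should be summed. In total there are $\min(n - 1, p)$ principal components and their portion of variance explained sums to one.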
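One way to compute these quantities is through the singular value decomposition of the centered data matrix, since the squared singular values are proportional to the variance of each principal component's scores. The sketch below takes that route; the function name `proportion_variance_explained` and the toy data are assumptions for illustration.

```python
import numpy as np

def proportion_variance_explained(X):
    """Portion of variance explained by each principal component of X."""
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)  # center each variable to mean zero
    # Squared singular values divided by n give each component's variance.
    singular_values = np.linalg.svd(centered, compute_uv=False)
    component_variance = singular_values**2 / len(X)
    total_variance = np.sum(centered**2) / len(X)
    return component_variance / total_variance

# Hypothetical data in which the second variable is nearly twice the first.
X = np.array([[1.0, 2.0], [2.0, 4.1], [3.0, 5.9], [4.0, 8.2]])
pve = proportion_variance_explained(X)
cumulative = np.cumsum(pve)  # cumulative portion for the first M components
```

Because the two variables are almost perfectly collinear here, nearly all of the variance is captured by the first component.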

Posterior Probability: Taking into account the predictor value for a given observation, the probability that the observation belongs to the kth class of a qualitative variable $Y$ that can take on $K$ distinct, unordered values. More formally,

$$ p_k(x) = \Pr(Y = k \mid X = x) $$

Prediction Interval: A measure of confidence in the prediction of an individual response, $Y = f(X) + \epsilon$. Prediction intervals will always be wider than confidence intervals because they take into account the uncertainty associated with $\epsilon$, the irreducible error.

Principal Component Analysis: A technique for reducing the dimension of an $n \times p$ data matrix $X$ to derive a low-dimensional set of features from a large set of variables.

The first principal component direction of the data is the line along which the observations vary the most.

Put another way, the first principal component direction is the line such that if the observations were projected onto the line then the projected observations would have the largest possible variance and projecting observations onto any other line would yield projected observations with lower variance.

Another interpretation of principal component analysis describes the first principal component vector as the line that is as close as possible to the data. In other words, the first principal component line minimizes the sum of the squared perpendicular distances between each point and the line. This means that the first principal component is chosen such that the projected observations are as close as possible to the original observations.

Projecting a point onto a line simply involves finding the location on the line which is closest to the point.

As many as $\min(n - 1, p)$ principal components can be computed.

Principal Component Regression: A regression method that first constructs the first $M$ principal components, $Z_1, \ldots, Z_M$, and then uses the components as the predictors in a linear regression model that is fit with least squares.

The premise behind this approach is that a small number of principal components can often suffice to explain most of the variability in the data as well as the relationship between the predictors and the response. This relies on the assumption that the directions in which $X_1, \ldots, X_p$ show the most variation are the directions that are associated with the response $Y$. Though not always true, it is true often enough to approximate good results.

Prior Probability: The probability that a given observation is associated with the kth class of a qualitative variable $Y$ that can take on $K$ distinct, unordered values.

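Quadratic Discriminant Analysis: An alternative approach to linear discriminant analysis that makes most of the same assumptions, except that quadratic discriminant analysis assumes that each class has its own covariance matrix. This amounts to assuming that an observation from the kth class has a distribution of the form

$$ X \sim N(\mu_k, \Sigma_k) $$

where $\Sigma_k$ is a covariance matrix for class $k$.

This yields a Bayes classifier that assigns an observation $X = x$ to the class with the largest value for

$$ \delta_k(x) = -\frac{1}{2} (x - \mu_k)^T \Sigma_k^{-1} (x - \mu_k) - \frac{1}{2} \log \lvert \Sigma_k \rvert + \log \pi_k $$

which is equivalent to

$$ \delta_k(x) = -\frac{1}{2} x^T \Sigma_k^{-1} x + x^T \Sigma_k^{-1} \mu_k - \frac{1}{2} \mu_k^T \Sigma_k^{-1} \mu_k - \frac{1}{2} \log \lvert \Sigma_k \rvert + \log \pi_k $$

The quadratic discriminant analysis Bayes classifier gets its name from the fact that it is a quadratic function in terms of $x$.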

Qualitative Value: A value expressed or expressible as a quality, typically limited to one of K different classes or categories.

Quantitative Value: A value expressed or expressible as a numerical quantity.

$R^2$ Statistic: A ratio capturing the proportion of variance explained as a value between $0$ and $1$, independent of the unit of $Y$.

To calculate the $R^2$ statistic, the following formula may be used

$$ R^2 = \frac{TSS - RSS}{TSS} = 1 - \frac{RSS}{TSS} $$

where RSS is the residual sum of squares,

$$ RSS = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 $$

and TSS is the total sum of squares,

$$ TSS = \sum_{i=1}^{n} (y_i - \bar{y})^2 $$

An $R^2$ statistic close to $1$ indicates that a large portion of the variability in the response is explained by the model. An $R^2$ value near $0$ indicates that the model accounted for very little of the variability of the response.

An $R^2$ value near $0$ may occur because the type of model is wrong and/or because the inherent $\sigma^2$ is high.
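A minimal sketch of the calculation, assuming the usual definitions $RSS = \sum (y_i - \hat{y}_i)^2$ and $TSS = \sum (y_i - \bar{y})^2$. The function name `r_squared` and the response values are hypothetical.

```python
import numpy as np

def r_squared(y, y_hat):
    """R^2 = 1 - RSS / TSS for observed responses y and predictions y_hat."""
    y, y_hat = np.asarray(y, dtype=float), np.asarray(y_hat, dtype=float)
    rss = np.sum((y - y_hat) ** 2)     # residual sum of squares
    tss = np.sum((y - y.mean()) ** 2)  # total sum of squares
    return float(1.0 - rss / tss)

# Hypothetical observed responses.
y = np.array([1.0, 2.0, 3.0, 4.0])

perfect = r_squared(y, y)                            # predictions match exactly
baseline = r_squared(y, np.full_like(y, y.mean()))   # always predict the mean
```

Perfect predictions give a value of 1, while always predicting the mean response gives 0, matching the two extremes described above.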

Random Forest: Random forests are similar to bagged trees; however, random forests introduce a randomized process that helps decorrelate trees.

During the random forest tree construction process, each time a split in a tree is considered, a random sample of $m$ predictors is chosen from the full set of $p$ predictors to be used as candidates for making the split. Only the randomly selected $m$ predictors can be considered for splitting the tree in that iteration. A fresh sample of $m$ predictors is considered at each split. Typically $m \approx \sqrt{p}$, meaning that the number of predictors considered at each split is approximately equal to the square root of the total number of predictors, $p$. This means that at each split only a minority of the available predictors are considered. This process helps mitigate the strength of very strong predictors, allowing more variation in the bagged trees, which ultimately helps reduce correlation between trees and better reduces variance. In the presence of an overly strong predictor, bagging may not outperform a single tree. A random forest would tend to do better in such a scenario.

On average, $\frac{p - m}{p}$ of the splits in a random forest will not even consider the strong predictor, which causes the resulting trees to be less correlated. In this sense, the random sampling of predictors acts as a decorrelation process.

As with bagging, random forests will not overfit as $B$, the number of trees, is increased, so a value of $B$ should be selected that allows the error rate to settle down.
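The per-split sampling of predictors might be sketched as follows. This is only an illustration of the candidate selection step, not a full random forest; the function name `sample_split_candidates`, the seed, and the choice of 16 predictors are assumptions made for the example.

```python
import numpy as np

def sample_split_candidates(p, rng, m=None):
    """Pick m of the p predictor indices, without replacement, for one split."""
    if m is None:
        m = max(1, int(np.sqrt(p)))   # common default: m is about sqrt(p)
    return rng.choice(p, size=m, replace=False)

rng = np.random.default_rng(0)        # arbitrary seed for reproducibility
p = 16                                # hypothetical number of predictors
candidates = sample_split_candidates(p, rng)   # indices eligible at this split

# Fraction of splits expected to exclude any one given predictor: (p - m) / p
m = len(candidates)
excluded_fraction = (p - m) / p
```

With 16 predictors and 4 candidates per split, any single predictor, however strong, is left out of three quarters of the splits on average.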

Recursive Binary Splitting: A top-down approach that begins at the top of a decision tree where all the observations belong to a single region, and successively splits the predictor space into two new branches. Recursive binary splitting is a greedy strategy because at each step in the process the best split is made relative to that particular step rather than looking ahead and picking a split that will result in a better split at some future step.

At each step the predictor $X_j$ and the cutpoint $s$ are selected such that splitting the predictor space into regions $\{X \mid X_j < s\}$ and $\{X \mid X_j \geq s\}$ leads to the greatest possible reduction in the residual sum of squares. This means that at each step, all the predictors and all possible values of the cutpoint for each of the predictors are considered. The optimal predictor and cutpoint are selected such that the resulting tree has the lowest residual sum of squares compared to the other candidate predictors and cutpoints.

More specifically, for any $j$ and $s$ that define the half planes

$$R_1(j, s) = \{X \mid X_j < s\}$$

and

$$R_2(j, s) = \{X \mid X_j \geq s\}$$

the goal is to find the $j$ and $s$ that minimize the equation

$$\sum_{i:\, x_i \in R_1(j, s)} (y_i - \hat{y}_{R_1})^2 + \sum_{i:\, x_i \in R_2(j, s)} (y_i - \hat{y}_{R_2})^2$$

where $\hat{y}_{R_1}$ and $\hat{y}_{R_2}$ are the mean responses for the training observations in the respective regions.

Only one region is split each iteration. The process concludes when some halting criteria are met.

This process can result in overly complex trees that overfit the data leading to poor test performance. A smaller tree often leads to lower variance and better interpretation at the cost of a little bias.
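A single greedy step of this procedure could be sketched as below: every predictor and every candidate cutpoint is tried, and the pair with the lowest residual sum of squares wins. The function name `best_split`, the use of midpoints between distinct values as candidate cutpoints, and the sample data are assumptions for illustration.

```python
import numpy as np

def best_split(X, y):
    """Return (j, s, rss) for the single split X_j < s that minimizes the RSS."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    best = (None, None, np.inf)
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])
        # Candidate cutpoints: midpoints between consecutive distinct values,
        # so both resulting regions always contain at least one observation.
        for s in (values[:-1] + values[1:]) / 2:
            left = y[X[:, j] < s]
            right = y[X[:, j] >= s]
            rss = np.sum((left - left.mean()) ** 2) + np.sum((right - right.mean()) ** 2)
            if rss < best[2]:
                best = (j, s, rss)
    return best

# Hypothetical data: the response jumps when the first predictor crosses 2.5.
X = np.array([[1.0, 5.0], [2.0, 3.0], [3.0, 4.0], [4.0, 6.0]])
y = np.array([1.0, 1.0, 5.0, 5.0])
j, s, rss = best_split(X, y)
```

On this data the search selects the first predictor with a cutpoint of 2.5, which separates the two response levels exactly. Growing a full tree would repeat this search within each resulting region until a halting criterion is met.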

Regression Problem: A class of problem that is well suited to statistical techniques for predicting the value of a dependent variable or response by modeling a function of one or more independent variables or predictors in the presence of an error term.

Regression Spline: A spline that is fit to data using a set of spline basis functions, typically fit using least squares.

Regression Tree: A decision tree produced in roughly two steps:

- Divide the predictor space, $X_1, X_2, \ldots, X_p$, into $J$ distinct and non-overlapping regions, $R_1, R_2, \ldots, R_J$.

- For every observation that falls into the region $R_j$, make the same prediction, which is the mean of the response values for the training observations in $R_j$.

To determine the appropriate regions, $R_1, R_2, \ldots, R_J$, it is preferable to divide the predictor space into high-dimensional rectangles, or boxes, for simplicity and ease of interpretation. Ideally the goal would be to find regions that minimize the residual sum of squares given by

$$\sum_{j=1}^{J} \sum_{i \in R_j} (y_i - \hat{y}_{R_j})^2$$

where $\hat{y}_{R_j}$ is the mean response for the training observations in the jth box. That said, it is computationally infeasible to consider every possible partition of the feature space into $J$ boxes. For this reason, a top-down, greedy approach known as recursive binary splitting is used.

Resampling Methods: Processes of repeatedly drawing samples from a data set and refitting a given model on each sample with the goal of learning more about the fitted model.

Resampling methods can be expensive since they require repeatedly performing the same statistical methods on different subsets of the data.
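As one concrete illustration, the sketch below uses the bootstrap, a resampling method that draws samples with replacement, to estimate the standard error of a statistic. The function name `bootstrap_se`, the number of resamples, the seed, and the data are assumptions for the example; the repeated recomputation inside the loop is the source of the cost noted above.

```python
import numpy as np

def bootstrap_se(data, statistic, n_resamples=1000, seed=0):
    """Estimate the standard error of `statistic` by resampling with replacement."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    n = len(data)
    estimates = np.empty(n_resamples)
    for b in range(n_resamples):
        sample = rng.choice(data, size=n, replace=True)   # draw one bootstrap sample
        estimates[b] = statistic(sample)                  # recompute the statistic
    return estimates.std(ddof=1)

# Hypothetical data set; the statistic here is the sample mean.
data = np.array([2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 4.7])
se_mean = bootstrap_se(data, np.mean)
```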

Residual: A quantity left over at the end of a process, a remainder or excess.

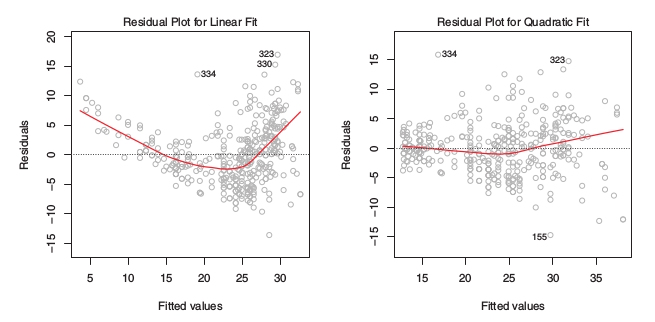

Residual Plot: A graphical tool useful for identifying non-linearity. For simple linear regression this consists of graphing the residuals, $e_i = y_i - \hat{y}_i$, versus the predicted or fitted values of $\hat{y}_i$.

If a residual plot indicates non-linearity in the model, then a simple approach is to use non-linear transformations of the predictors, such as $\log X$, $\sqrt{X}$, or $X^2$, in the regression model.

The example residual plots above suggest that a quadratic fit may be more appropriate for the model under scrutiny.

Residual Standard Error: An estimate of standard error derived from the residual sum of squares, calculated with the following formula

$$\mathrm{RSE} = \sqrt{\frac{\mathrm{RSS}}{n - p - 1}}$$

which simplifies to the following for simple linear regression

$$\mathrm{RSE} = \sqrt{\frac{\mathrm{RSS}}{n - 2}}$$

where $\mathrm{RSS}$ is the residual sum of squares. Expanding $\mathrm{RSS}$ yields the formula

$$\mathrm{RSE} = \sqrt{\frac{1}{n - 2} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2}$$

In rough terms, the residual standard error is the average amount by which the response will deviate from the true regression line. This means that the residual standard error amounts to an estimate of the standard deviation of $\epsilon$, the irreducible error. The residual standard error can also be viewed as a measure of the lack of fit of the model to the data.
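A minimal sketch of the calculation, assuming the usual form $\sqrt{\mathrm{RSS} / (n - p - 1)}$ with $p$ predictors, is shown below. The function name and the fitted values are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
import numpy as np

def residual_standard_error(y, y_hat, p=1):
    """RSE = sqrt(RSS / (n - p - 1)); p = 1 corresponds to simple linear regression."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    rss = np.sum((y - y_hat) ** 2)            # residual sum of squares
    return np.sqrt(rss / (len(y) - p - 1))

y = np.array([1.0, 2.0, 3.0, 4.0])
y_hat = np.array([2.0, 2.0, 3.0, 3.0])        # hypothetical fitted values
rse = residual_standard_error(y, y_hat)
```

Here the RSS is 2 and $n - 2 = 2$, so the residual standard error is 1.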

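Residual Sum of Squares: The sum of the squared residuals, where each residual is the difference between an observed value and its predicted value.

Assuming the $i$th prediction of $Y$ is described as

$$\hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_i$$

then the $i$th residual can be represented as

$$e_i = y_i - \hat{y}_i = y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i$$

The residual sum of squares can then be described as

$$\mathrm{RSS} = e_1^2 + e_2^2 + \ldots + e_n^2$$

or

$$\mathrm{RSS} = (y_1 - \hat{\beta}_0 - \hat{\beta}_1 x_1)^2 + (y_2 - \hat{\beta}_0 - \hat{\beta}_1 x_2)^2 + \ldots + (y_n - \hat{\beta}_0 - \hat{\beta}_1 x_n)^2$$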

Ridge Regression: A shrinkage method very similar to least squares fitting except the coefficients are estimated by minimizing a modified quantity.

Recall that the least squares fitting procedure estimates the coefficients by minimizing the residual sum of squares where the residual sum of squares is given by

$$\mathrm{RSS} = \sum_{i=1}^{n} \left( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \right)^2$$

Ridge regression instead selects the coefficients that minimize

$$\sum_{i=1}^{n} \left( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \right)^2 + \lambda \sum_{j=1}^{p} \beta_j^2 = \mathrm{RSS} + \lambda \sum_{j=1}^{p} \beta_j^2$$

where $\lambda \geq 0$ is a tuning parameter.

The second term, $\lambda \sum_{j=1}^{p} \beta_j^2$, is a shrinkage penalty. The penalty is small when the coefficients are close to zero, and it grows with $\lambda$ and with the size of the coefficients. As the second term grows, it pushes the coefficient estimates closer to zero, thereby shrinking them.

The tuning parameter $\lambda$ serves to control the balance of how the two terms affect coefficient estimates. When $\lambda$ is zero, the second term is nullified, yielding estimates exactly matching those of least squares. As $\lambda$ approaches infinity, the impact of the shrinkage penalty grows, shrinking the ridge regression coefficients closer and closer to zero.

Depending on the value of $\lambda$, ridge regression will produce different sets of estimates, notated by $\hat{\beta}^R_\lambda$, for each value of $\lambda$.

It’s worth noting that the ridge regression penalty is only applied to variable coefficients, $\beta_1, \beta_2, \ldots, \beta_p$, not the intercept coefficient $\beta_0$. Recall that the goal is to shrink the impact of each variable on the response and as such, this shrinkage should not be applied to the intercept coefficient, which is a measure of the mean value of the response when none of the variables are present.

An important difference between ridge regression and least squares regression is that least squares regression’s coefficient estimates are scale equivalent and ridge regression’s are not. Because of this, it is best to apply ridge regression after standardizing the predictors.

Compared to subset methods, ridge regression is at a disadvantage when it comes to number of predictors used since ridge regression will always use all $p$ predictors. Ridge regression will shrink predictor coefficients toward zero, but it will never set any of them to exactly zero (except when $\lambda = \infty$). Though the extra predictors may not hurt prediction accuracy, they can make interpretability more difficult, especially when $p$ is large.

Ridge regression will tend to perform better when the response is a function of many predictors, all with coefficients roughly equal in size.
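The shrinking behavior can be illustrated with a small sketch that uses the closed-form ridge solution on centered and standardized predictors. The closed form, the centering step, the function name `ridge_fit`, and the simulated data are assumptions made for this example rather than details taken from the notes.

```python
import numpy as np

def ridge_fit(X, y, lam):
    """Ridge estimates on standardized predictors; the intercept is not penalized."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)   # center and scale each predictor
    p = Xs.shape[1]
    # Closed-form minimizer of RSS + lam * sum(beta_j^2) for centered predictors.
    beta = np.linalg.solve(Xs.T @ Xs + lam * np.eye(p), Xs.T @ (y - y.mean()))
    intercept = y.mean()                        # unpenalized intercept
    return intercept, beta

# Hypothetical simulated data with three predictors.
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = 1.0 + X @ np.array([2.0, -1.0, 0.5]) + rng.normal(scale=0.1, size=50)

_, beta_ols = ridge_fit(X, y, lam=0.0)       # lam = 0 reproduces least squares
_, beta_ridge = ridge_fit(X, y, lam=100.0)   # larger lam shrinks toward zero
```

Comparing `beta_ols` with `beta_ridge` shows the coefficients moving toward zero as the tuning parameter grows, without any of them becoming exactly zero.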

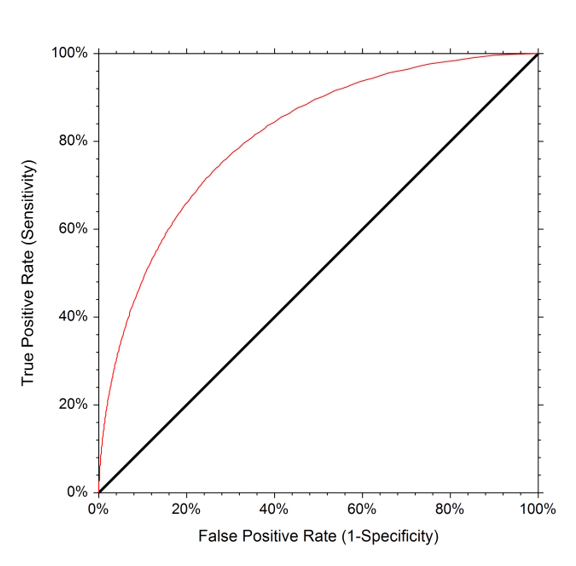

ROC Curve: A useful graphic for displaying specificity and sensitivity error rates for all possible posterior probability thresholds. ROC is a historic acronym that comes from communications theory and stands for receiver operating characteristics.

The overall performance of a classifier summarized over all possible thresholds is quantified by the area under the ROC curve.

A more ideal ROC curve will hold more tightly to the top left corner which, in turn, will increase the area under the ROC curve. A classifier that performs no better than chance will have an area under the ROC curve less than or equal to 0.5 when evaluated against a test data set.
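A rough sketch of how the curve and its area might be computed from posterior probabilities is given below. The function names `roc_points` and `auc`, the trapezoidal rule, and the sample scores are illustrative assumptions.

```python
import numpy as np

def roc_points(scores, labels):
    """Return (false positive rates, true positive rates) over all thresholds."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    # Classify as positive when score >= threshold, from strictest to loosest.
    thresholds = np.concatenate(([np.inf], np.unique(scores)[::-1]))
    n_pos = np.sum(labels == 1)
    n_neg = np.sum(labels == 0)
    tpr = np.array([np.sum((scores >= t) & (labels == 1)) / n_pos for t in thresholds])
    fpr = np.array([np.sum((scores >= t) & (labels == 0)) / n_neg for t in thresholds])
    return fpr, tpr

def auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))

# Hypothetical posterior probabilities and true classes (1 = positive).
scores = np.array([0.9, 0.8, 0.7, 0.4, 0.3, 0.1])
labels = np.array([1, 1, 0, 1, 0, 0])
fpr, tpr = roc_points(scores, labels)
area = auc(fpr, tpr)
```

For this data the area is 8/9, which matches the fraction of (positive, negative) pairs in which the positive observation receives the higher score.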

Scale Equivalent: Typically refers to the relationship between coefficient estimates and predictor values. A system is said to be scale equivalent if multiplying the predictors by a scalar results in a scaling of the coefficient estimates by the reciprocal of the scalar.

For example, when calculating least squares coefficient estimates, multiplying $X_j$ by a constant, $c$, leads to a scaling of the least squares coefficient estimates by a factor of $\frac{1}{c}$. Another way of looking at it is that regardless of how the jth predictor is scaled, the value of $X_j \hat{\beta}_j$ remains the same. In contrast, ridge regression coefficients can change dramatically when the scale of a given predictor is changed. This means that $X_j \hat{\beta}^R_{j,\lambda}$ may depend on the scaling of other predictors. Because of this, it is best to apply ridge regression after standardizing the predictors.
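The least squares half of this claim can be checked numerically. The sketch below, using simulated data and a hypothetical helper `ols_slope`, scales a single predictor by a constant and compares the resulting slope estimates.

```python
import numpy as np

rng = np.random.default_rng(0)   # hypothetical simulated data
x = rng.normal(size=30)
y = 3.0 + 2.0 * x + rng.normal(scale=0.5, size=30)

def ols_slope(x, y):
    """Least squares slope for a single predictor with an intercept."""
    return np.sum((x - x.mean()) * (y - y.mean())) / np.sum((x - x.mean()) ** 2)

c = 10.0
slope = ols_slope(x, y)
slope_scaled = ols_slope(c * x, y)   # predictor multiplied by c
# slope_scaled equals slope / c, so (c * x) * slope_scaled equals x * slope.
```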

Shrinkage Methods: An alternative strategy to subset selection that uses all the predictors, but employs a technique to constrain or regularize the coefficient estimates.

Constraining coefficient estimates can significantly reduce their variance. Two well known techniques of shrinking regression coefficients toward zero are ridge regression and the lasso.

Shrinkage Penalty: A penalty used in shrinkage methods to shrink the impact of each variable on the response.

Sensitivity: The percentage of truly positive observations that are correctly classified as positive (the true positive rate).

Simple Linear Regression: A model that predicts a quantitative response $Y$ on the basis of a single predictor variable $X$. It assumes an approximately linear relationship between $X$ and $Y$. Formally,

$$Y \approx \beta_0 + \beta_1 X$$

where $\beta_0$ represents the intercept or the value of $Y$ when $X$ is equal to $0$ and $\beta_1$ represents the slope of the line or the average amount of change in $Y$ for each one-unit increase in $X$.
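Assuming the coefficients are estimated by least squares, a minimal sketch of fitting the intercept and slope is shown below. The function name `fit_simple_linear` and the data are hypothetical.

```python
import numpy as np

def fit_simple_linear(x, y):
    """Least squares estimates (intercept, slope) for a single predictor."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope = np.sum((x - x.mean()) * (y - y.mean())) / np.sum((x - x.mean()) ** 2)
    intercept = y.mean() - slope * x.mean()
    return intercept, slope

# Hypothetical data lying exactly on the line y = 1 + 2x.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 3.0, 5.0, 7.0])
beta0, beta1 = fit_simple_linear(x, y)
```

Because the data lie exactly on a line, the estimates recover an intercept of 1 and a slope of 2.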

Slope: In a linear model, the average amount of change in the dependent variable, $Y$, for each one-unit increase in the independent variable, $X$.

Smoothing Spline: An approach to producing a spline that utilizes a loss or penalty function to minimize the residual sum of squares while also ensuring the resulting spline is smooth. Commonly this results in a function $g$ that minimizes

$$\sum_{i=1}^{n} (y_i - g(x_i))^2 + \lambda \int g''(t)^2 \, dt$$

where the term

$$\lambda \int g''(t)^2 \, dt$$

is a penalty that encourages $g$ to be smooth and less variable and $\lambda$ is a non-negative tuning parameter.

Specificity: The percentage of truly negative observations that are correctly classified as negative (the true negative rate).
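Sensitivity and specificity can be computed side by side from true and predicted classes, as in the sketch below. The function name and the labels are hypothetical, with 1 denoting the positive class and 0 the negative class.

```python
import numpy as np

def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels coded as 1 and 0."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))   # true positives
    fn = np.sum((y_true == 1) & (y_pred == 0))   # false negatives
    tn = np.sum((y_true == 0) & (y_pred == 0))   # true negatives
    fp = np.sum((y_true == 0) & (y_pred == 1))   # false positives
    return tp / (tp + fn), tn / (tn + fp)

y_true = [1, 1, 1, 0, 0, 0, 0, 1]   # hypothetical true classes
y_pred = [1, 0, 1, 0, 0, 1, 0, 1]   # hypothetical predictions
sens, spec = sensitivity_specificity(y_true, y_pred)
```

In this example three of the four positives and three of the four negatives are classified correctly, so both rates are 0.75.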

Standard Error: Roughly, describes the average amount that an estimate, $\hat{\mu}$, differs from the actual value of $\mu$.

Standard error can be useful for estimating the accuracy of a single estimated value, such as an average. To calculate the standard error of an estimated value $\hat{\mu}$, the following equation can be used:

$$\mathrm{Var}(\hat{\mu}) = \mathrm{SE}(\hat{\mu})^2 = \frac{\sigma^2}{n}$$

where $\sigma$ is the standard deviation of each of the observed values $y_i$.

The more observations there are (the larger $n$), the smaller the standard error.
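For the sample mean, the calculation might look like the sketch below. Since the true standard deviation is rarely known, the sample standard deviation is substituted for it here, which is an assumption of this example; the function name and data are also hypothetical.

```python
import numpy as np

def standard_error_of_mean(values):
    """Estimate the standard error of the mean as s / sqrt(n)."""
    values = np.asarray(values, dtype=float)
    s = values.std(ddof=1)                 # sample standard deviation
    return s / np.sqrt(len(values))

values = np.array([2.0, 4.0, 4.0, 6.0])   # hypothetical observations
se = standard_error_of_mean(values)
```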

Standardized Values: A standardized value is the result of scaling a data point with regard to the population. More concretely, standardized values allow for putting multiple predictors on the same scale by normalizing each predictor relative to its estimated standard deviation. As a result, all the predictors will have a standard deviation of $1$. A formula for standardizing predictors is given by:

$$\tilde{x}_{ij} = \frac{x_{ij}}{\sqrt{\frac{1}{n} \sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2}}$$
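A short sketch of this scaling with NumPy follows. It divides each column by its standard deviation computed with a $1/n$ divisor and does not center the data; the function name `standardize` and the sample matrix are assumptions for illustration.

```python
import numpy as np

def standardize(X):
    """Divide each predictor (column) by its estimated standard deviation."""
    X = np.asarray(X, dtype=float)
    return X / X.std(axis=0)   # NumPy's default std uses a 1/n divisor

# Hypothetical predictors measured on very different scales.
X = np.array([[1.0, 100.0], [2.0, 300.0], [3.0, 500.0]])
X_std = standardize(X)
```

After scaling, every column of `X_std` has a standard deviation of 1, so no predictor dominates a penalty term simply because of its units.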

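Step Function: A method of modeling non-linearity that splits the range of $X$ into bins and fits a different constant to each bin. This is equivalent to converting a continuous variable into an ordered categorical variable.

First, $K$ cut points, $c_1, c_2, \ldots, c_K$, are created in the range of $X$, from which $K + 1$ new variables are created.

$$
\begin{aligned}
C_0(X) &= I(X < c_1), \\
C_1(X) &= I(c_1 \leq X < c_2), \\
C_2(X) &= I(c_2 \leq X < c_3), \\
&\;\;\vdots \\
C_{K-1}(X) &= I(c_{K-1} \leq X < c_K), \\
C_K(X) &= I(c_K \leq X)
\end{aligned}
$$

where $I$ is an indicator function that returns 1 if the condition is true and 0 otherwise.

It is worth noting that each bin is unique and

$$C_0(X) + C_1(X) + \ldots + C_K(X) = 1$$

since each value of $X$ only ends up in one of $K + 1$ intervals.

Once the slices have been selected, a linear model is fit using $C_1(X), C_2(X), \ldots, C_K(X)$ as predictors:

$$y_i = \beta_0 + \beta_1 C_1(x_i) + \beta_2 C_2(x_i) + \ldots + \beta_K C_K(x_i) + \epsilon_i$$

Only one of $C_1, C_2, \ldots, C_K$ can be non-zero. When $X < c_1$, all the predictors will be zero. This means $\beta_0$ can be interpreted as the mean value of $Y$ for $X < c_1$. Similarly, for $c_j \leq X < c_{j+1}$, the linear model reduces to $\beta_0 + \beta_j$, so $\beta_j$ represents the average increase in the response for $X$ in $c_j \leq X < c_{j+1}$ compared to $X < c_1$.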

Unless there are natural breakpoints in the predictors, piecewise constant functions can miss the interesting data.
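The construction of the indicator variables and the resulting fit can be sketched as follows. The helper `step_basis`, the cut points, and the data are hypothetical, and the fit uses ordinary least squares with an intercept standing in for the first bin.

```python
import numpy as np

def step_basis(x, cutpoints):
    """Return one indicator column per bin above the first cut point.

    The lowest bin has no column of its own; the intercept accounts for it.
    """
    x = np.asarray(x, dtype=float)
    c = np.asarray(cutpoints, dtype=float)
    bins = np.searchsorted(c, x, side="right")    # bin index 0..K for each x
    return (bins[:, None] == np.arange(1, len(c) + 1)).astype(float)

x = np.array([1.0, 2.0, 6.0, 7.0, 11.0, 12.0])   # hypothetical data
y = np.array([1.0, 3.0, 5.0, 7.0, 10.0, 12.0])
C = step_basis(x, cutpoints=[5.0, 10.0])

design = np.column_stack([np.ones(len(x)), C])   # intercept plus indicators
beta, *_ = np.linalg.lstsq(design, y, rcond=None)
```

The fitted intercept equals the mean response in the lowest bin (2), and the other two coefficients (4 and 9) are the differences between each higher bin's mean response and that baseline.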

Studentized Residual: Because the standard deviation of residuals in a sample can vary greatly from one data point to another even when the errors all have the same standard deviation, it often does not make sense to compare residuals at different data points without first studentizing. Studentized residuals are computed by dividing each residual, $e_i$, by its estimated standard error. Observations whose studentized residual is greater than $3$ in absolute value are possible outliers.
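A sketch for simple linear regression is given below. It assumes internal studentization, where the standard error of each residual is estimated as $\mathrm{RSE} \sqrt{1 - h_i}$ with $h_i$ the leverage of the $i$th observation; that choice, the function name, and the data with one planted outlier are assumptions for the example.

```python
import numpy as np

def studentized_residuals(x, y):
    """Internally studentized residuals for simple linear regression."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(x)
    sxx = np.sum((x - x.mean()) ** 2)
    slope = np.sum((x - x.mean()) * (y - y.mean())) / sxx
    intercept = y.mean() - slope * x.mean()
    residuals = y - (intercept + slope * x)
    rse = np.sqrt(np.sum(residuals ** 2) / (n - 2))      # residual standard error
    leverage = 1.0 / n + (x - x.mean()) ** 2 / sxx       # h_i for each point
    return residuals / (rse * np.sqrt(1.0 - leverage))   # e_i / SE(e_i)

# Hypothetical data on the line y = 1 + 2x with one planted outlier.
x = np.arange(30, dtype=float)
y = 1.0 + 2.0 * x
y[15] += 20.0
t = studentized_residuals(x, y)
flagged = np.flatnonzero(np.abs(t) > 3)   # indices of possible outliers
```

Only the planted observation is flagged here, since it is the only one whose studentized residual exceeds 3 in absolute value.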

Supervised Learning: The process of inferring or modeling a function from a set of training observations where each observation consists of one or more features or predictors paired with a response measurement. The response measurement is used to guide the process of generating a model that relates the predictors to the response, with the goal of accurately predicting future observations or of better inferring the relationship between the predictors and the response.