Chapter 3 - Linear Regression

Simple Linear Regression

Simple linear regression predicts a quantitative response $Y$ on the basis of a single predictor variable $X$. It assumes an approximately linear relationship between $X$ and $Y$. Formally,

$$ Y \approx \beta_0 + \beta_1 X $$

where $\beta_0$ represents the intercept, or the value of $Y$ when $X$ is equal to $0$, and $\beta_1$ represents the slope of the line, or the average amount of change in $Y$ for each one-unit increase in $X$.

Together, $\beta_0$ and $\beta_1$ are known as the model coefficients or parameters.

Estimating Model Coefficients

Since $\beta_0$ and $\beta_1$ are typically unknown, it is first necessary to estimate the coefficients before making predictions. To estimate the coefficients, it is desirable to choose values for $\hat{\beta}_0$ and $\hat{\beta}_1$ such that the resulting line is as close as possible to the observed data points.

There are many ways of measuring closeness. The most common method, least squares, minimizes the sum of the squared residuals, where a residual is the difference between an observed value and its predicted value.

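Assuming the $i$th prediction of $Y$ is described as

$$ \hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_i $$

then the $i$th residual can be represented as

$$ e_i = y_i - \hat{y}_i = y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i $$

The residual sum of squares can then be described as

$$ \mathrm{RSS} = e_1^2 + e_2^2 + \ldots + e_n^2 $$

or

$$ \mathrm{RSS} = (y_1 - \hat{\beta}_0 - \hat{\beta}_1 x_1)^2 + (y_2 - \hat{\beta}_0 - \hat{\beta}_1 x_2)^2 + \ldots + (y_n - \hat{\beta}_0 - \hat{\beta}_1 x_n)^2 $$

Assuming sample means of

$$ \bar{y} = \frac{1}{n} \sum_{i=1}^{n} y_i $$

and

$$ \bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i $$

calculus can be applied to find the least squares coefficient estimates that minimize the residual sum of squares, like so

$$ \hat{\beta}_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2} $$

$$ \hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x} $$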
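As a minimal sketch of the least squares estimates for simple linear regression, the closed-form solution can be written in a few lines of plain Python. The function name `fit_simple_linear_regression` and the toy data are assumptions made for illustration only.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (beta0_hat, beta1_hat), the least squares intercept and slope."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Numerator and denominator of the slope estimate.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    beta1_hat = sxy / sxx
    beta0_hat = y_bar - beta1_hat * x_bar
    return beta0_hat, beta1_hat


# Hypothetical data lying exactly on the line y = 1 + 2x.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]
beta0_hat, beta1_hat = fit_simple_linear_regression(xs, ys)
```

Because the toy data has no noise, the estimates recover the intercept and slope used to generate it.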

Assessing Coefficient Estimate Accuracy

Simple linear regression represents the relationship between $Y$ and $X$ as

$$ Y = \beta_0 + \beta_1 X + \epsilon $$

where $\beta_0$ is the intercept term, or the value of $Y$ when $X = 0$; $\beta_1$ is the slope, or average increase in $Y$ associated with a one-unit increase in $X$; and $\epsilon$ is the error term, which acts as a catchall for what is missed by the simple model: the true relationship likely isn’t linear, there may be other variables that affect $Y$, and/or there may be error in the observed measurements. The error term is typically assumed to be independent of $X$.

The model used by simple linear regression defines the population regression line, which describes the best linear approximation to the true relationship between $X$ and $Y$ for the population.

The coefficient estimates yielded by least squares regression characterize the least squares line,

$$ \hat{y}_i = \hat{\beta}_0 + \hat{\beta}_1 x_i $$

The difference between the population regression line and the least squares line is similar to the difference that emerges when using a sample to estimate the characteristics of a large population.

In linear regression, the unknown coefficients, $\beta_0$ and $\beta_1$, define the population regression line, whereas the estimates of those coefficients, $\hat{\beta}_0$ and $\hat{\beta}_1$, define the least squares line.

Though the parameter estimates for a given sample may overestimate or underestimate the value of a particular parameter, an unbiased estimator does not systematically overestimate or underestimate the true parameter.

This means that using an unbiased estimator and a large number of data sets, the values of the coefficients $\beta_0$ and $\beta_1$ could be determined by averaging the coefficient estimates from each of those data sets.

To estimate the accuracy of a single estimated value, such as an average, it can be helpful to calculate the standard error of the estimated value $\hat{\mu}$, which can be accomplished like so

$$ \mathrm{Var}(\hat{\mu}) = \mathrm{SE}(\hat{\mu})^2 = \frac{\sigma^2}{n} $$

where $\sigma$ is the standard deviation of each $y_i$.

Roughly, the standard error describes the average amount that the estimate $\hat{\mu}$ differs from $\mu$.

The more observations there are, that is, the larger $n$ is, the smaller the standard error.

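To compute the standard errors associated with $\hat{\beta}_0$ and $\hat{\beta}_1$, the following formulas can be used:

$$ \mathrm{SE}(\hat{\beta}_0)^2 = \sigma^2 \left[ \frac{1}{n} + \frac{\bar{x}^2}{\sum_{i=1}^{n} (x_i - \bar{x})^2} \right] $$

and

$$ \mathrm{SE}(\hat{\beta}_1)^2 = \frac{\sigma^2}{\sum_{i=1}^{n} (x_i - \bar{x})^2} $$

where $\sigma^2 = \mathrm{Var}(\epsilon)$ and the errors $\epsilon_i$ are assumed to be uncorrelated with a common variance $\sigma^2$.

$\sigma^2$ generally isn’t known, but can be estimated from the data. The estimate of $\sigma$ is known as the residual standard error and can be calculated with the following formula

$$ \mathrm{RSE} = \sqrt{\frac{\mathrm{RSS}}{n - 2}} $$

where $\mathrm{RSS}$ is the residual sum of squares.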

Standard errors can be used to compute confidence intervals and prediction intervals.

A confidence interval is defined as a range of values such that there’s a certain likelihood that the range will contain the true unknown value of the parameter.

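For simple linear regression the 95% confidence interval for $\beta_1$ can be approximated by

$$ \hat{\beta}_1 \pm 2 \cdot \mathrm{SE}(\hat{\beta}_1) $$

Similarly, a confidence interval for $\beta_0$ can be approximated as

$$ \hat{\beta}_0 \pm 2 \cdot \mathrm{SE}(\hat{\beta}_0) $$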
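The standard error and interval formulas can be sketched together in plain Python. This is an illustrative sketch only: the function name, the dictionary keys, and the sample data are assumptions, and the intervals use the rough "plus or minus two standard errors" approximation rather than an exact t quantile.

```python
import math


def simple_regression_inference(xs, ys):
    """Fit y = b0 + b1*x; return estimates, standard errors, rough 95% intervals."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    beta1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    beta0 = y_bar - beta1 * x_bar

    # The residual standard error estimates sigma with n - 2 degrees of freedom.
    rss = sum((y - (beta0 + beta1 * x)) ** 2 for x, y in zip(xs, ys))
    rse = math.sqrt(rss / (n - 2))

    se_beta0 = rse * math.sqrt(1 / n + x_bar ** 2 / sxx)
    se_beta1 = rse / math.sqrt(sxx)
    return {
        "beta0": beta0,
        "beta1": beta1,
        "se_beta0": se_beta0,
        "se_beta1": se_beta1,
        "ci_beta0": (beta0 - 2 * se_beta0, beta0 + 2 * se_beta0),
        "ci_beta1": (beta1 - 2 * se_beta1, beta1 + 2 * se_beta1),
    }


# Hypothetical noisy observations scattered around a line with slope near 2.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
result = simple_regression_inference(xs, ys)
```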

The accuracy of an estimated prediction depends on whether we wish to predict an individual response, $y = f(x) + \epsilon$, or the average response, $f(x)$.

When predicting an individual response, $y = f(x) + \epsilon$, a prediction interval is used.

When predicting an average response, $f(x)$, a confidence interval is used.

Prediction intervals will always be wider than confidence intervals because they take into account the uncertainty associated with $\epsilon$, the irreducible error.

The standard error can also be used to perform hypothesis testing on the estimated coefficients.

The most common hypothesis test involves testing the null hypothesis that states

$H_0$: There is no relationship between $X$ and $Y$

versus the alternative hypothesis

$H_a$: There is some relationship between $X$ and $Y$

In mathematical terms, the null hypothesis corresponds to testing if $\beta_1 = 0$, which reduces the model to

$$ Y = \beta_0 + \epsilon $$

which indicates that $X$ is not related to $Y$.

To test the null hypothesis, it is necessary to determine whether the estimate of $\beta_1$, $\hat{\beta}_1$, is sufficiently far from zero to provide confidence that $\beta_1$ is non-zero.

How far is far enough depends on $\mathrm{SE}(\hat{\beta}_1)$. When $\mathrm{SE}(\hat{\beta}_1)$ is small, even small values of $\hat{\beta}_1$ may provide strong evidence that $\beta_1$ is not zero. Conversely, if $\mathrm{SE}(\hat{\beta}_1)$ is large, then $\hat{\beta}_1$ will need to be large in absolute value in order to reject the null hypothesis.

In practice, computing a T-statistic, which measures the number of standard deviations that $\hat{\beta}_1$ is away from $0$, is useful for determining if an estimate is sufficiently significant to reject the null hypothesis.

A T-statistic can be computed as follows

$$ t = \frac{\hat{\beta}_1 - 0}{\mathrm{SE}(\hat{\beta}_1)} $$

If there is no relationship between $X$ and $Y$, the T-statistic is expected to follow a t-distribution with $n - 2$ degrees of freedom.

With such a distribution, it is possible to calculate the probability of observing a value of $|t|$ or larger assuming that $\beta_1 = 0$. This probability, called the p-value, can indicate an association between the predictor and the response if sufficiently small.
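The T-statistic itself is a one-line computation. The sketch below also shows a rough p-value, with one loudly stated assumption: Python's standard library has no t-distribution, so `approx_two_sided_p_value` substitutes the standard normal distribution, which is only a reasonable stand-in when $n$ is fairly large. The function names and input numbers are hypothetical.

```python
from statistics import NormalDist


def t_statistic(beta1_hat, se_beta1):
    """Number of standard errors that the slope estimate lies away from zero."""
    return (beta1_hat - 0.0) / se_beta1


def approx_two_sided_p_value(t):
    """ASSUMPTION: normal approximation to the t-distribution (large n only)."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(t)))


# Hypothetical slope estimate and standard error.
t = t_statistic(1.99, 0.0597)
p_value = approx_two_sided_p_value(t)
```

For small samples, an exact p-value would require the t-distribution with $n - 2$ degrees of freedom from a statistics library.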

Assessing Model Accuracy

Once the null hypothesis has been rejected, it may be desirable to quantify to what extent the model fits the data. The quality of a linear regression model is typically assessed using residual standard error (RSE) and the $R^2$ statistic.

The residual standard error is an estimate of the standard deviation of $\epsilon$, the irreducible error.

In rough terms, the residual standard error is the average amount by which the response will deviate from the true regression line.

For linear regression, the residual standard error can be computed as

$$ \mathrm{RSE} = \sqrt{\frac{1}{n - 2} \mathrm{RSS}} = \sqrt{\frac{1}{n - 2} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2} $$

The residual standard error is a measure of the lack of fit of the model to the data. When $\hat{y}_i \approx y_i$ for all observations, the RSE will be small and the model will fit the data well. Conversely, if $\hat{y}_i$ is far from $y_i$ for some values, the RSE may be large, indicating that the model doesn’t fit the data well.

The RSE provides an absolute measure of the lack of fit of the model in the units of $Y$. This can make it difficult to know what constitutes a good RSE value.

The $R^2$ statistic is an alternative measure of fit that takes the form of a proportion. The $R^2$ statistic captures the proportion of variance explained as a value between $0$ and $1$, independent of the unit of $Y$.

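To calculate the $R^2$ statistic, the following formula may be used

$$ R^2 = \frac{\mathrm{TSS} - \mathrm{RSS}}{\mathrm{TSS}} = 1 - \frac{\mathrm{RSS}}{\mathrm{TSS}} $$

where

$$ \mathrm{RSS} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 $$

and

$$ \mathrm{TSS} = \sum_{i=1}^{n} (y_i - \bar{y})^2 $$

The total sum of squares, TSS, measures the total variance in the response $Y$. The TSS can be thought of as the total variability in the response before applying linear regression. Conversely, the residual sum of squares, RSS, measures the amount of variability left after performing the regression.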

Ergo, $\mathrm{TSS} - \mathrm{RSS}$ measures the amount of variability in the response that is explained by the model. $R^2$ measures the proportion of variability in $Y$ that can be explained by $X$. An $R^2$ statistic close to $1$ indicates that a large portion of the variability in the response is explained by the model. An $R^2$ value near $0$ indicates that the model accounted for very little of the variability in the response.

An $R^2$ value near $0$ may occur because the linear model is wrong and/or because the inherent error variance $\sigma^2$ is high.

$R^2$ has an advantage over RSE since it will always yield a value between $0$ and $1$, but it can still be tough to know what a good $R^2$ value is. Frequently, what constitutes a good $R^2$ value depends on the application and what is known about the problem.

The $R^2$ statistic is a measure of the linear relationship between $X$ and $Y$.

Correlation is another measure of the linear relationship between $X$ and $Y$. The correlation of $X$ and $Y$ can be calculated as

$$ \mathrm{Cor}(X, Y) = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2} \sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}} $$

This suggests that $r = \mathrm{Cor}(X, Y)$ could be used instead of $R^2$ to assess the fit of the linear model. In fact, for simple linear regression it can be shown that $R^2 = r^2$. More concisely, for simple linear regression, the squared correlation and the $R^2$ statistic are equivalent. Though this is the case for simple linear regression, correlation does not extend to multiple linear regression since correlation quantifies the association between a single pair of variables. The $R^2$ statistic can, however, be applied to multiple regression.
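The equivalence between $R^2$ and the squared correlation in simple linear regression can be checked numerically. The sketch below is illustrative only; the helper names and the data are assumptions.

```python
import math


def r_squared(ys, fitted):
    """R^2 = 1 - RSS / TSS for observed responses and fitted values."""
    y_bar = sum(ys) / len(ys)
    tss = sum((y - y_bar) ** 2 for y in ys)
    rss = sum((y - f) ** 2 for y, f in zip(ys, fitted))
    return 1.0 - rss / tss


def correlation(xs, ys):
    """Sample correlation between two equally long sequences."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data; fit the least squares line, then compare R^2 with r^2.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]
x_bar = sum(xs) / len(xs)
y_bar = sum(ys) / len(ys)
beta1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
    (x - x_bar) ** 2 for x in xs
)
beta0 = y_bar - beta1 * x_bar
fitted = [beta0 + beta1 * x for x in xs]

r = correlation(xs, ys)
r2 = r_squared(ys, fitted)
```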

Multiple Regression

The multiple linear regression model takes the form of

$$ Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \ldots + \beta_p X_p + \epsilon $$

Multiple linear regression extends simple linear regression to accommodate multiple predictors.

$X_j$ represents the $j$th predictor and $\beta_j$ represents the average effect of a one-unit increase in $X_j$ on $Y$, holding all other predictors fixed.

Estimating Multiple Regression Coefficients

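Because the coefficients $\beta_0, \beta_1, \ldots, \beta_p$ are unknown, it is necessary to estimate their values. Given estimates $\hat{\beta}_0, \hat{\beta}_1, \ldots, \hat{\beta}_p$, predictions can be made using the formula below

$$ \hat{y} = \hat{\beta}_0 + \hat{\beta}_1 x_1 + \hat{\beta}_2 x_2 + \ldots + \hat{\beta}_p x_p $$

The parameters $\hat{\beta}_0, \hat{\beta}_1, \ldots, \hat{\beta}_p$ can be estimated using the same least squares strategy as was employed for simple linear regression. Values are chosen for the parameters such that the residual sum of squares is minimized

$$ \mathrm{RSS} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = \sum_{i=1}^{n} (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_{i1} - \hat{\beta}_2 x_{i2} - \ldots - \hat{\beta}_p x_{ip})^2 $$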

Estimating the values of these parameters is best achieved with matrix algebra.
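One common matrix formulation, assumed here for illustration, is the normal equations $(X^T X)\hat{\beta} = X^T y$, where $X$ is the design matrix with a leading column of ones. The sketch below solves them with a small hand-written Gaussian elimination so that it runs without any libraries; in practice a numerical linear algebra package would normally be used. All names and data are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit_multiple_regression(rows, ys):
    """Return [beta0_hat, beta1_hat, ..., betap_hat] from the normal equations."""
    design = [[1.0] + list(row) for row in rows]  # prepend intercept column
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical data generated exactly from y = 1 + 2*x1 + 3*x2.
rows = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0), (5.0, 5.0)]
ys = [9.0, 8.0, 19.0, 18.0, 26.0]
coefficients = fit_multiple_regression(rows, ys)
```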

Assessing Multiple Regression Coefficient Accuracy

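Once estimates have been derived, it is next appropriate to test the null hypothesis

$$ H_0: \beta_1 = \beta_2 = \ldots = \beta_p = 0 $$

versus the alternative hypothesis

$$ H_a: \text{at least one of } \beta_j \text{ is non-zero} $$

The F-statistic can be used to decide between these hypotheses.

The F-statistic can be computed as

$$ F = \frac{(\mathrm{TSS} - \mathrm{RSS}) / p}{\mathrm{RSS} / (n - p - 1)} $$

where, again,

$$ \mathrm{TSS} = \sum_{i=1}^{n} (y_i - \bar{y})^2 $$

and

$$ \mathrm{RSS} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 $$

If the assumptions of the linear model are true, it can be shown that

$$ E\{\mathrm{RSS} / (n - p - 1)\} = \sigma^2 $$

Additionally, if the null hypothesis is true, it can be shown that

$$ E\{(\mathrm{TSS} - \mathrm{RSS}) / p\} = \sigma^2 $$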

This means that when there is no relationship between the response and the predictors the F-statistic takes on a value close to $1$.

Conversely, if the alternative hypothesis is true, then the F-statistic is expected to take on a value greater than $1$.

When $n$ is large, an F-statistic only slightly greater than $1$ may provide evidence against the null hypothesis. If $n$ is small, a large F-statistic is needed to reject the null hypothesis.

When the null hypothesis is true and the errors have a normal distribution, the F-statistic follows an F-distribution. Using the F-distribution, it is possible to compute a p-value for the given $n$, $p$, and F-statistic. Based on the obtained p-value, the null hypothesis can either be rejected or not.

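It is sometimes desirable to test that a particular subset of $q$ coefficients are $0$. This equates to a null hypothesis of

$$ H_0: \beta_{p-q+1} = \beta_{p-q+2} = \ldots = \beta_p = 0 $$

Supposing that the residual sum of squares for a second model that omits those $q$ variables is $\mathrm{RSS}_0$, then the F-statistic could be calculated as

$$ F = \frac{(\mathrm{RSS}_0 - \mathrm{RSS}) / q}{\mathrm{RSS} / (n - p - 1)} $$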
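Both forms of the F-statistic are simple ratios once the sums of squares are known. The sketch below is illustrative; the function names and the input numbers are made up for the example rather than taken from any data set.

```python
def f_statistic(tss, rss, n, p):
    """Overall F-statistic for a model with p predictors fit on n observations."""
    return ((tss - rss) / p) / (rss / (n - p - 1))


def partial_f_statistic(rss0, rss, n, p, q):
    """F-statistic for testing that q of the p coefficients are zero.

    rss0 is the RSS of the smaller model that omits those q predictors.
    """
    return ((rss0 - rss) / q) / (rss / (n - p - 1))


# Hypothetical sums of squares.
overall = f_statistic(tss=100.0, rss=40.0, n=23, p=2)
partial = partial_f_statistic(rss0=50.0, rss=40.0, n=23, p=2, q=1)
```

When $q = p$, the smaller model contains only the intercept, so $\mathrm{RSS}_0 = \mathrm{TSS}$ and the two statistics coincide.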

Even in the presence of p-values for each individual variable, it is still important to consider the overall F-statistic because there is a reasonably high likelihood that a variable with a small p-value will occur just by chance, even in the absence of any true association between the predictors and the response.

In contrast, the F-statistic does not suffer from this problem because it adjusts for the number of predictors. The F-statistic is not infallible: when the null hypothesis is true, the F-statistic can still result in p-values below $0.05$ about 5% of the time, regardless of the number of predictors or the number of observations.

The F-statistic works best when $p$ is relatively small or when $p$ is relatively small compared to $n$.

When $p$ is greater than $n$, multiple linear regression using least squares will not work, and similarly, the F-statistic cannot be used either.

Selecting Important Variables

Once it has been established that at least one of the predictors is associated with the response, the question remains, which of the predictors is related to the response? The process of removing extraneous predictors that don’t relate to the response is called variable selection.

Ideally, the process of variable selection would involve testing many different models, each with a different subset of the predictors, then selecting the best model of the bunch, with the meaning of “best” being derived from various statistical methods.

Regrettably, there are a total of $2^p$ models that contain subsets of $p$ predictors. Because of this, an efficient and automated means of choosing a smaller subset of models is needed. There are a number of statistical approaches to limiting the range of possible models.

Forward selection begins with a null model, a model that has an intercept but no predictors, and attempts $p$ simple linear regressions, keeping whichever predictor results in the lowest residual sum of squares. In this fashion, the predictor yielding the lowest RSS is added to the model one-by-one until some halting condition is met. Forward selection is a greedy process and it may include extraneous variables.
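Forward selection can be sketched as a greedy loop over candidate predictors. This is an illustrative sketch under stated assumptions: the halting condition is a hypothetical `max_predictors` cap, models are fit via the normal equations with a hand-written solver, and all names and data are invented for the example.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def residual_sum_of_squares(columns, ys):
    """RSS of the least squares fit of ys on an intercept plus the given columns."""
    design = [[1.0] + [col[i] for col in columns] for i in range(len(ys))]
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)
    return sum(
        (y - sum(b * v for b, v in zip(beta, row))) ** 2
        for row, y in zip(design, ys)
    )


def forward_selection(predictors, ys, max_predictors):
    """Greedily add the predictor that lowers RSS the most at each step."""
    selected = []
    remaining = list(predictors)
    while remaining and len(selected) < max_predictors:
        def rss_if_added(name):
            columns = [predictors[s] for s in selected + [name]]
            return residual_sum_of_squares(columns, ys)

        best = min(remaining, key=rss_if_added)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical data: y depends strongly on x1, weakly on x2, and not on x3.
predictors = {
    "x1": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    "x2": [1.0, -1.0, 1.0, -1.0, 1.0, -1.0],
    "x3": [1.0, 0.0, 0.0, 1.0, 0.0, 0.0],
}
ys = [3.0 * a + 0.5 * b for a, b in zip(predictors["x1"], predictors["x2"])]
chosen = forward_selection(predictors, ys, max_predictors=2)
```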

Backward selection begins with a model that includes all the predictors and proceeds by removing the variable with the highest p-value each iteration until some stopping condition is met. Backward selection cannot be used when $p > n$.

Mixed selection begins with a null model, like forward selection, repeatedly adding whichever predictor yields the best fit. As more predictors are added, the p-values become larger. When this happens, if the p-value for one of the variables exceeds a certain threshold, that variable is removed from the model. The selection process continues in this forward and backward manner until all the variables in the model have sufficiently low p-values and all the predictors excluded from the model would result in a high p-value if added to the model.

Assessing Multiple Regression Model Fit

While in simple linear regression the $R^2$, the fraction of variance explained, is equal to $\mathrm{Cor}(X, Y)^2$, in multiple linear regression, $R^2$ is equal to $\mathrm{Cor}(Y, \hat{Y})^2$. In other words, $R^2$ is equal to the square of the correlation between the response and the fitted linear model. In fact, the fitted linear model maximizes this correlation among all possible linear models.

An $R^2$ close to $1$ indicates that the model explains a large portion of the variance in the response variable. However, it should be noted that $R^2$ will never decrease, and will almost always increase, when more variables are added to the model, even when those variables are only weakly related to the response. This happens because adding another variable to the least squares equation can only yield an equal or closer fit to the training data, though it won’t necessarily yield a closer fit to the test data.

Residual standard error, RSE, can also be used to assess the fit of a multiple linear regression model. In general, RSE can be calculated as

$$ \mathrm{RSE} = \sqrt{\frac{\mathrm{RSS}}{n - p - 1}} $$

which simplifies to the following for simple linear regression

$$ \mathrm{RSE} = \sqrt{\frac{\mathrm{RSS}}{n - 2}} $$

Given the definition of RSE for multiple linear regression, it can be seen that models with more variables can have a higher RSE if the decrease in RSS is small relative to the increase in $p$.

In addition to $R^2$ and RSE, it can also be useful to plot the data to verify the model.

Once coefficients have been estimated, making predictions is as simple as plugging the coefficients and predictor values into the multiple linear model

$$ \hat{y} = \hat{\beta}_0 + \hat{\beta}_1 x_1 + \hat{\beta}_2 x_2 + \ldots + \hat{\beta}_p x_p $$

However, it should be noted that these predictions will be subject to three types of uncertainty.

- The coefficient estimates, $\hat{\beta}_0, \hat{\beta}_1, \ldots, \hat{\beta}_p$, are only estimates of the actual coefficients $\beta_0, \beta_1, \ldots, \beta_p$. That is to say, the least squares plane is only an estimate of the true population regression plane. The error introduced by this inaccuracy is reducible error and a confidence interval can be computed to determine how close $\hat{Y}$ is to $f(X)$.

- Assuming a linear model for $f(X)$ is almost always an approximation of reality, which means additional reducible error is introduced due to model bias. A linear model often models the best linear approximation of the true, non-linear surface.

- Even in the case where $f(X)$ and the true values of the coefficients, $\beta_0, \beta_1, \ldots, \beta_p$, are known, the response value cannot be predicted exactly because of the random, irreducible error, $\epsilon$, in the model. How much $Y$ will tend to vary from $\hat{Y}$ can be determined using prediction intervals.

Prediction intervals will always be wider than confidence intervals because they incorporate both the error in the estimate of $f(X)$, the reducible error, and the variation in how each point differs from the population regression plane, the irreducible error.

Qualitative predictors

Linear regression can also accommodate qualitative variables.

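When a qualitative predictor or factor has only two possible values or levels, it can be incorporated into the model by introducing an indicator variable or dummy variable that takes on only two numerical values.

For example, using a coding like

$$ x_i = \begin{cases} 1 & \text{if the } i\text{th observation is in class A} \\ 0 & \text{if the } i\text{th observation is in class B} \end{cases} $$

yields a regression equation like

$$ y_i = \beta_0 + \beta_1 x_i + \epsilon_i = \begin{cases} \beta_0 + \beta_1 + \epsilon_i & \text{if the } i\text{th observation is in class A} \\ \beta_0 + \epsilon_i & \text{if the } i\text{th observation is in class B} \end{cases} $$

Given such a coding, $\beta_1$ represents the average difference in $Y$ between classes A and B.

Alternatively, a dummy variable like the following could be used

$$ x_i = \begin{cases} 1 & \text{if the } i\text{th observation is in class A} \\ -1 & \text{if the } i\text{th observation is in class B} \end{cases} $$

which results in a regression model like

$$ y_i = \beta_0 + \beta_1 x_i + \epsilon_i = \begin{cases} \beta_0 + \beta_1 + \epsilon_i & \text{if the } i\text{th observation is in class A} \\ \beta_0 - \beta_1 + \epsilon_i & \text{if the } i\text{th observation is in class B} \end{cases} $$

In which case, $\beta_0$ represents the overall average and $\beta_1$ is the amount class A is above the average and class B below the average.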

Regardless of the coding scheme, the predictions will be equivalent. The only difference is the way the coefficients are interpreted.

When a qualitative predictor takes on more than two values, a single dummy variable cannot represent all possible values. Instead, multiple dummy variables can be used. The number of variables required will always be one less than the number of values that the predictor can take on.

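For example, with a predictor that can take on three values, the following coding could be used

$$ x_{i1} = \begin{cases} 1 & \text{if the } i\text{th observation is in class A} \\ 0 & \text{otherwise} \end{cases} $$

$$ x_{i2} = \begin{cases} 1 & \text{if the } i\text{th observation is in class B} \\ 0 & \text{otherwise} \end{cases} $$

$$ y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \epsilon_i = \begin{cases} \beta_0 + \beta_1 + \epsilon_i & \text{if the } i\text{th observation is in class A} \\ \beta_0 + \beta_2 + \epsilon_i & \text{if the } i\text{th observation is in class B} \\ \beta_0 + \epsilon_i & \text{if the } i\text{th observation is in class C} \end{cases} $$

With such a coding, $\beta_0$ can be interpreted as the average response for class C. $\beta_1$ can be interpreted as the average difference in response between classes A and C. Finally, $\beta_2$ can be interpreted as the average difference in response between classes B and C.

The case where $x_{i1}$ and $x_{i2}$ are both zero, the level with no dummy variable, is known as the baseline.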

Using dummy variables allows for easily mixing quantitative and qualitative predictors.

There are many ways to encode dummy variables. Each approach yields equivalent model fits, but results in different coefficients and different interpretations that highlight different contrasts.
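A baseline-style dummy coding can be sketched in a few lines. The helper name `dummy_encode` and the class labels are assumptions for illustration; the baseline level receives no column of its own, so it is represented by a row of zeros.

```python
def dummy_encode(values, baseline):
    """Return (levels, rows): one 0/1 column per non-baseline level."""
    levels = sorted(set(values) - {baseline})
    rows = [[1 if value == level else 0 for level in levels] for value in values]
    return levels, rows


# Hypothetical three-level factor with class C as the baseline.
levels, rows = dummy_encode(["A", "B", "C", "A"], baseline="C")
```

A factor with three levels yields two dummy columns, one fewer than the number of levels.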

Extending the Linear Model

Though linear regression provides interpretable results, it makes several highly restrictive assumptions that are often violated in practice. One assumption is that the relationship between the predictors and the response is additive. Another assumption is that the relationship between the predictors and the response is linear.

The additive assumption implies that the effect of changes in a predictor on the response is independent of the values of the other predictors.

The linear assumption implies that the change in the response due to a one-unit change in $X_j$ is constant regardless of the value of $X_j$.

The additive assumption ignores the possibility of an interaction between predictors. One way to account for an interaction effect is to include an additional predictor, called an interaction term, that computes the product of the associated predictors.

Modeling Predictor Interaction

A linear regression model that accounts for interaction between two predictors would look like

$$ Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \beta_3 X_1 X_2 + \epsilon $$

$\beta_3$ can be interpreted as the increase in effectiveness of $X_1$ given a one-unit increase in $X_2$ and vice-versa.

It is sometimes possible for an interaction term to have a very small p-value while the associated main effects, $X_1$ and $X_2$, do not. Even in such a scenario the main effects should still be included in the model due to the hierarchical principle.

The hierarchical principle states that, when an interaction term is included in the model, the main effects should also be included, even if the p-values associated with their coefficients are not significant. The reason for this is that $X_1 X_2$ is often correlated with $X_1$ and $X_2$, and removing them tends to change the meaning of the interaction.

If $X_1 X_2$ is related to the response, then whether or not the coefficient estimates of $X_1$ or $X_2$ are exactly zero is of limited interest.

Interaction terms can also model a relationship between a quantitative predictor and a qualitative predictor.

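In the case of a linear regression with one quantitative predictor, $x_{i1}$, and one two-level qualitative variable, and without an interaction term, the model takes the form

$$ y_i \approx \beta_0 + \beta_1 x_{i1} + \begin{cases} \beta_2 & \text{if the } i\text{th observation is in class A} \\ 0 & \text{otherwise} \end{cases} $$

With the addition of an interaction term, the model takes the form

$$ y_i \approx \beta_0 + \beta_1 x_{i1} + \begin{cases} \beta_2 + \beta_3 x_{i1} & \text{if the } i\text{th observation is in class A} \\ 0 & \text{otherwise} \end{cases} $$

which is equivalent to

$$ y_i \approx \begin{cases} (\beta_0 + \beta_2) + (\beta_1 + \beta_3) x_{i1} & \text{if the } i\text{th observation is in class A} \\ \beta_0 + \beta_1 x_{i1} & \text{otherwise} \end{cases} $$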

Modeling Non-Linear Relationships

To mitigate the effects of the linear assumption it is possible to accommodate non-linear relationships by incorporating polynomial functions of the predictors in the regression model.

For example, in a scenario where a quadratic relationship seems likely, the following model could be used

$$ Y = \beta_0 + \beta_1 X + \beta_2 X^2 + \epsilon $$

This extension of the linear model to accommodate non-linear relationships is called polynomial regression.

Common Problems with Linear Regression

- Non-linearity of the response-predictor relationship

- Correlation of error terms

- Non-constant variance of error terms

- Outliers

- High-leverage points

- Collinearity

1. Non-linearity of the response-predictor relationship

If the true relationship between the response and predictors is far from linear, then virtually all conclusions that can be drawn from the model are suspect and prediction accuracy can be significantly reduced.

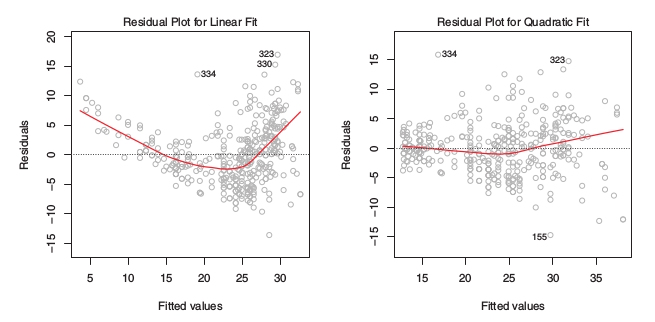

Residual plots are a useful graphical tool for identifying non-linearity. For simple linear regression this consists of graphing the residuals, $e_i = y_i - \hat{y}_i$, versus the predicted or fitted values of $\hat{y}_i$.

If a residual plot indicates non-linearity in the model, then a simple approach is to use non-linear transformations of the predictors, such as $\log{X}$, $\sqrt{X}$, or $X^2$, in the regression model.

The example residual plots above suggest that a quadratic fit may be more appropriate for the model under scrutiny.

2. Correlation of error terms

An important assumption of linear regression is that the error terms, $\epsilon_1, \epsilon_2, \ldots, \epsilon_n$, are uncorrelated. Because the standard errors of the estimated regression coefficients are calculated based on the assumption that the error terms are uncorrelated, correlated error terms will result in estimated standard errors that tend to underestimate the true standard errors. This will result in prediction intervals and confidence intervals that are narrower than they should be. In addition, p-values associated with the model will be lower than they should be. In other words, correlated error terms can make a model appear to be stronger than it really is.

Correlations in error terms can be the result of time series data, unexpected observation relationships, and other environmental factors. Observations that are obtained at adjacent time points will often have positively correlated errors. Good experiment design is also a crucial factor in limiting correlated error terms.

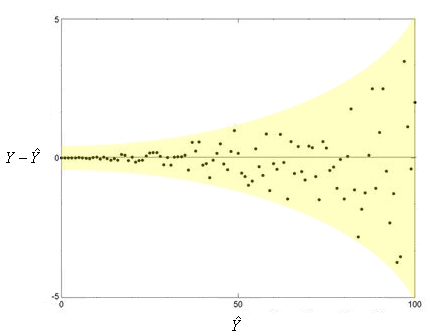

3. Non-constant variance of error terms

Linear regression also assumes that the error terms have a constant variance, $\mathrm{Var}(\epsilon_i) = \sigma^2$.

Standard errors, confidence intervals, and hypothesis testing all depend on this assumption.

Residual plots can help identify non-constant variances in the error, or heteroscedasticity, if a funnel shape is present.

One way to address this problem is to transform the response $Y$ using a concave function such as $\log{Y}$ or $\sqrt{Y}$. This results in a greater amount of shrinkage of the larger responses, leading to a reduction in heteroscedasticity.

4. Outliers

An outlier is a point for which $y_i$ is far from the value predicted by the model.

Excluding outliers can result in improved residual standard error and improved $R^2$ values, usually with negligible impact to the least squares fit.

Residual plots can help identify outliers, though it can be difficult to know how big a residual needs to be before considering a point an outlier. To address this, it can be useful to plot the studentized residuals instead of the normal residuals. Studentized residuals are computed by dividing each residual, $e_i$, by its estimated standard error. Observations whose studentized residual is greater than $3$ in absolute value are possible outliers.

Outliers should only be removed when confident that the outliers are due to a recording or data collection error since outliers may otherwise indicate a missing predictor or other deficiency in the model.

5. High-Leverage Points

While outliers relate to observations for which the response $y_i$ is unusual given the predictor $x_i$, in contrast, observations with high leverage are those that have an unusual value for the predictor $x_i$ for the given response $y_i$.

High leverage observations tend to have a sizable impact on the estimated regression line and as a result, removing them can yield improvements in model fit.

For simple linear regression, high leverage observations can be identified as those for which the predictor value is outside the normal range. With multiple regression, it is possible to have an observation for which each individual predictor’s values are well within the expected range, but that is unusual in terms of the combination of the full set of predictors.

To quantify an observation’s leverage, the leverage statistic can be computed.

A large leverage statistic indicates an observation with high leverage.

For simple linear regression, the leverage statistic can be computed as

$$ h_i = \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_{j=1}^{n} (x_j - \bar{x})^2} $$

The leverage statistic always falls between $\frac{1}{n}$ and $1$ and the average leverage is always equal to $\frac{p + 1}{n}$. So, if an observation has a leverage statistic that greatly exceeds $\frac{p + 1}{n}$ then it may be evidence that the corresponding point has high leverage.
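The leverage statistic for simple linear regression depends only on the predictor values, which a short sketch makes concrete. The function name and the data, which include one deliberately extreme predictor value, are assumptions for illustration.

```python
def leverage_statistics(xs):
    """Leverage h_i of each observation in a simple linear regression."""
    n = len(xs)
    x_bar = sum(xs) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return [1 / n + (x - x_bar) ** 2 / sxx for x in xs]


# Hypothetical predictor values; the last one sits far from the rest.
xs = [1.0, 2.0, 3.0, 4.0, 20.0]
leverages = leverage_statistics(xs)
average_leverage = sum(leverages) / len(leverages)  # equals (p + 1) / n with p = 1
```

Here the final observation has a leverage far above the average, flagging it as a high-leverage point.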

6. Collinearity

Collinearity refers to the situation in which two or more predictor variables are closely related to one another.

Collinearity can pose problems for linear regression because it can make it hard to determine the individual impact of collinear predictors on the response.

Collinearity reduces the accuracy of the regression coefficient estimates, which in turn causes the standard error of $\hat{\beta}_j$ to grow. Since the T-statistic for each predictor is calculated by dividing $\hat{\beta}_j$ by its standard error, collinearity results in a decline in the T-statistic. This may cause the test to fail to reject $H_0: \beta_j = 0$, so that a predictor appears unrelated to the response when it is in fact related. As such, collinearity reduces the power of the hypothesis test, that is, the probability of correctly detecting a non-zero coefficient. Because of all this, it is important to address possible collinearity problems when fitting the model.

One way to detect collinearity is to generate a correlation matrix of the predictors. Any element in the matrix with a large absolute value indicates highly correlated predictors. This is not always sufficient though, as it is possible for collinearity to exist between three or more variables even if no pair of variables have high correlation. This scenario is known as multicollinearity.

Multicollinearity can be detected by computing the variance inflation factor. The variance inflation factor is the ratio of the variance of $\hat{\beta}_j$ when fitting the full model divided by the variance of $\hat{\beta}_j$ if fit on its own. The smallest possible VIF value is $1$, which indicates no collinearity whatsoever. In practice, there is typically a small amount of collinearity among predictors. As a general rule of thumb, VIF values that exceed 5 or 10 indicate a problematic amount of collinearity.

The variance inflation factor for each variable can be computed using the formula

$$ \mathrm{VIF}(\hat{\beta}_j) = \frac{1}{1 - R^2_{X_j | X_{-j}}} $$

where $R^2_{X_j | X_{-j}}$ is the $R^2$ from a regression of $X_j$ onto all of the other predictors. If $R^2_{X_j | X_{-j}}$ is close to one, the VIF will be large and collinearity is present.
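The VIF formula can be illustrated for the two-predictor case, where the $R^2$ from regressing one predictor on the other equals their squared correlation. The function names and the nearly collinear toy data are assumptions for illustration.

```python
def variance_inflation_factor(r_squared):
    """VIF given the R^2 from regressing one predictor on all the others."""
    return 1.0 / (1.0 - r_squared)


def squared_correlation(a, b):
    """Squared sample correlation, i.e. R^2 of a simple regression of a on b."""
    n = len(a)
    a_bar = sum(a) / n
    b_bar = sum(b) / n
    sab = sum((u - a_bar) * (v - b_bar) for u, v in zip(a, b))
    saa = sum((u - a_bar) ** 2 for u in a)
    sbb = sum((v - b_bar) ** 2 for v in b)
    return sab ** 2 / (saa * sbb)


# Hypothetical predictors: x2 is almost a copy of x1, so the VIF is large.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [1.1, 1.9, 3.2, 3.8, 5.1]
vif = variance_inflation_factor(squared_correlation(x1, x2))
```

With more than two predictors, the $R^2$ would instead come from a multiple regression of $X_j$ on all the remaining predictors.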

One way to handle collinearity is to drop one of the problematic variables. This usually doesn’t compromise the fit of the regression as the collinearity implies that the information that the predictor provides about the response is redundant in the presence of the other variables.

A second means of handling collinearity is to combine the collinear predictors together into a single predictor by some kind of transformation such as an average.

Parametric Methods Versus Non-Parametric Methods

A non-parametric method akin to linear regression is k-nearest neighbors regression which is closely related to the k-nearest neighbors classifier.

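Given a value for $K$ and a prediction point $x_0$, k-nearest neighbors regression first identifies the $K$ observations that are closest to $x_0$, represented by $N_0$. $f(x_0)$ is then estimated using the average of the training responses in $N_0$ like so

$$ \hat{f}(x_0) = \frac{1}{K} \sum_{x_i \in N_0} y_i $$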
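K-nearest neighbors regression is short enough to sketch directly. The version below assumes Euclidean distance (via `math.dist`, available in Python 3.8+), and the function name and data are hypothetical.

```python
import math


def knn_regress(train_x, train_y, x0, k):
    """Average the responses of the k training points nearest to x0."""
    by_distance = sorted(
        zip(train_x, train_y), key=lambda pair: math.dist(pair[0], x0)
    )
    neighbors = by_distance[:k]
    return sum(y for _, y in neighbors) / k


# Hypothetical one-dimensional training data; each point is a 1-tuple.
train_x = [(0.0,), (1.0,), (2.0,), (3.0,), (10.0,)]
train_y = [0.0, 1.0, 2.0, 3.0, 10.0]
prediction = knn_regress(train_x, train_y, x0=(1.4,), k=3)
```

The three nearest points to $1.4$ are $1$, $2$, and $0$, so the prediction is the mean of their responses.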

A parametric approach will outperform a non-parametric approach if the parametric form is close to the true form of $f$.

The choice of a parametric approach versus a non-parametric approach will depend largely on the bias-variance trade-off and the shape of the function $f$.

When the true relationship is linear, it is difficult for a non-parametric approach to compete with linear regression because the non-parametric approach incurs a cost in variance that is not offset by a reduction in bias. Additionally, in higher dimensions, K-nearest neighbors regression often performs worse than linear regression. This is often due to combining too small an $n$ with too large a $p$, resulting in a given observation having no nearby neighbors. This is often called the curse of dimensionality. In other words, the $K$ observations nearest to an observation $x_0$ may be far away from $x_0$ in a $p$-dimensional space when $p$ is large, leading to a poor prediction of $f(x_0)$ and a poor K-nearest neighbors regression fit.

As a general rule, parametric models will tend to outperform non-parametric models when there are only a small number of observations per predictor.